Well, not exactly through her tongue, but the device in her mouth sent visual input through her tongue in much the same way that sighted people receive visual input through their eyes. In both cases, the initial sensory input mechanism -- the tongue or the eyes -- sends the visual data to the brain, where that data is processed and interpreted to form images. What we're talking about here is electrotactile stimulation for sensory augmentation or substitution. This area of study uses encoded electric current to represent sensory information that a person cannot receive through the usual channel, and applies that current to the skin, which passes the information on to the brain. The brain then learns to interpret that sensory information as if it had arrived through the usual channel for such data. In the 1960s and '70s, this process was the subject of groundbreaking research in sensory substitution at the Smith-Kettlewell Institute, led by Paul Bach-y-Rita, MD, now Professor of Orthopedics and Rehabilitation and Biomedical Engineering at the University of Wisconsin, Madison. Today it's the basis for Wicab's BrainPort technology (Dr. Bach-y-Rita is also Chief Scientist and Chairman of the Board of Wicab).

How it happens

The channels that carry sensory information to the brain, from the eyes, ears and skin, for instance, are set up in a similar manner to perform similar activities. All sensory information sent to the brain is carried by nerve fibers as patterns of impulses, and those impulses end up in the brain's various sensory centers for interpretation. To substitute one sensory input channel for another, you need to correctly encode the nerve signals for the sensory event and send them to the brain through the alternate channel. The brain appears to be flexible when it comes to interpreting sensory input. You can train it to read input from, say, the tactile channel as visual or balance information, and to act on it accordingly. In JS Online's "Device may be new pathway to the brain," University of Wisconsin biomedical engineer and BrainPort technology co-inventor Mitch Tyler states, "It's a great mystery as to how that process takes place, but the brain can do it if you give it the right information."

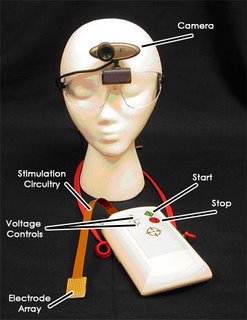

The concepts at work behind electrotactile stimulation for sensory substitution are complex, and the mechanics of implementation are no less so. The idea is to communicate non-tactile information via electrical stimulation of the sense of touch. In practice, this typically means that an array of electrodes receiving input from a non-tactile information source (a camera, for instance) applies small, controlled, painless currents (some subjects say it feels something like soda bubbles) to the skin at precise locations according to an encoded pattern. The encoding of the electrical pattern essentially attempts to mimic the input that the non-functioning sense would normally receive. So the patterns of light a camera picks up to form an image, standing in for the eyes, are converted into electrical pulses that represent those patterns of light. When the encoded pulses are applied to the skin, the skin is actually receiving image data. According to Dr. Kurt Kaczmarek, BrainPort technology co-inventor and Senior Scientist with the University of Wisconsin Department of Orthopedics and Rehabilitation Medicine, what happens next is that "the electric field thus generated in subcutaneous tissue directly excites the afferent nerve fibers responsible for normal, mechanical touch sensations." Those nerve fibers forward their image-encoded touch signals to the touch-processing (somatosensory) area of the cerebral cortex in the parietal lobe.
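
To make the "camera image to electrode pattern" step more concrete, here is a minimal sketch of one way such an encoding could work. It is not Wicab's actual method, which the article doesn't describe. Everything in it is an assumption chosen for illustration: the hypothetical 12x12 electrode grid, 8-bit grayscale input, block averaging to shrink the image to the grid, a brightness threshold, and a linear mapping from brightness to a hypothetical pulse width. The helper names `downsample`, `encode` and `make_t_frame` are made up for this example.

```python
# Illustrative sketch only: map a grayscale camera frame onto a hypothetical
# electrode grid. Grid size, threshold and pulse widths are assumptions,
# not BrainPort specifications.

GRID_ROWS = 12       # hypothetical number of electrode rows
GRID_COLS = 12       # hypothetical number of electrode columns
MAX_PULSE_US = 40    # hypothetical maximum pulse width, in microseconds


def downsample(frame, rows=GRID_ROWS, cols=GRID_COLS):
    """Average equal-sized pixel blocks so each block maps to one electrode."""
    h, w = len(frame), len(frame[0])
    bh, bw = h // rows, w // cols
    if bh == 0 or bw == 0:
        raise ValueError("frame is smaller than the electrode grid")
    grid = []
    for r in range(rows):
        row = []
        for c in range(cols):
            block = [frame[y][x]
                     for y in range(r * bh, (r + 1) * bh)
                     for x in range(c * bw, (c + 1) * bw)]
            row.append(sum(block) / len(block))
        grid.append(row)
    return grid


def encode(frame, threshold=128):
    """Convert 0-255 brightness into per-electrode pulse widths.

    Electrodes whose averaged brightness falls below the threshold stay off
    (pulse width 0); brighter ones get a width proportional to brightness.
    """
    return [
        [round(MAX_PULSE_US * v / 255) if v >= threshold else 0 for v in row]
        for row in downsample(frame)
    ]


def make_t_frame(size=24):
    """Build a toy 24x24 'camera frame' showing a bright letter T on black."""
    frame = [[0] * size for _ in range(size)]
    for y in range(size):
        for x in range(size):
            in_bar = y < 4 and 4 <= x < 20        # top bar of the T
            in_stem = 4 <= y < 20 and 10 <= x < 14  # vertical stem
            if in_bar or in_stem:
                frame[y][x] = 255
    return frame


if __name__ == "__main__":
    # Show which electrodes would fire: '#' = stimulated, '.' = off.
    for row in encode(make_t_frame()):
        print("".join("#" if p else "." for p in row))
```

Running the script prints a coarse block-letter T, one character per electrode. Block averaging is about the simplest way to shrink an image to a small grid. The threshold keeps a dim background from producing constant, uninformative stimulation. A real device would also have to handle timing, current limits and per-user comfort levels, none of which this sketch models.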

After training in laboratory tests, blind subjects were able to perceive visual traits like looming, depth, perspective, size and shape. The subjects could still feel the pulses on their tongue, but they could also perceive images generated from those pulses by their brain. The subjects perceived the objects as "out there" in front of them, separate from their own bodies. They could perceive and identify letters of the alphabet. In one case, when blind mountain climber Erik Weihenmayer was testing out the device, he was able to locate his wife in a forest. One of the most common questions at this point is, "Are they really seeing?" That all depends on how you define vision.
If seeing means you can identify the letter "T" somewhere outside yourself, sense when that "T" is getting larger, smaller, changing orientation or moving farther away from your own body, then they're really seeing. One study that conducted PET brain scans of congenitally blind people while they were using the BrainPort vision device found that after several sessions with BrainPort, the vision centers of the subjects' brains lit up when visual information was sent to the brain through the tongue. If "seeing" means there's activity in the vision center of the cerebral cortex, then the blind subjects are really seeing.

The BrainPort test results are somewhat astonishing and lead many to wonder about the scope of applications for the technology. In the next section, we'll see which BrainPort applications Wicab is currently focusing on in clinical trials, what other applications it foresees for the technology and how close it is to commercially launching a consumer-friendly version of the device.
