Tuesday, August 22, 2006

Amazing use of Technology

NFC wirelessly links a glucometer and an insulin pump. The glucometer records the blood sugar reading and then recommends a bolus dose of insulin. If the patient accepts the dose, then they simply swipe the glucometer against the insulin pump, which could be located beneath clothing, and the drug is delivered.

This is amazing for several reasons. First and foremost, the patient is in on the decision. Just as exciting is the question of where else it can be used: Cambridge lists pain, asthma, respiratory care, gastric electrical stimulation therapy, congestive heart failure and urinary urge incontinence treatment.

Because NFC works only within a range of about 10 cm, a user must intentionally bring NFC devices into close proximity to make a connection, transfer information and then trigger the process.

Yes, I know that the hundreds of products and apps introduced daily are important too, but it's especially cool to see technology used in unique and life-improving ways.

Near Field Communication (NFC) - A New Standard

More than just a wireless connection, NFC is positioned as a basic tool that allows customers to interact intuitively with an increasingly electronic environment. However, the near-term success of NFC is challenging to predict as a more complete customer understanding of NFC, its possibilities, and its limitations is required.

What is NFC?

Evolving from a combination of contactless, identification and networking technologies, NFC is a short-range wireless connectivity standard. With the increased adoption of RFID contactless smart-cards to support a broad range of applications, such as access, payment, and ticketing, and the commercial availability of NFC-enabled devices such as cell phones from Nokia, the convergence of NFC with RFID is gaining interest.

Pioneered by Philips and jointly developed with Sony, the NFC standard specifies a way for cell phones, PDAs, and other wireless devices to establish a peer-to-peer (P2P) network. After the P2P network has been configured with NFC, another wireless communication technology, such as Bluetooth or Wi-Fi, can be used for longer-range communication or for transferring larger amounts of data.
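The hand-off from NFC to a faster link can be sketched as a tiny negotiation: the short NFC exchange carries only an offer listing longer-range carriers, and the devices pick one they share. This is a toy model under stated assumptions: the `Device` and `HandoverOffer` names, the carrier strings and the addresses are hypothetical, and the NFC Forum's actual handover message format is not reproduced here.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class HandoverOffer:
    """Hypothetical record sent over NFC: just enough to set up the faster link."""
    carrier: str   # e.g. "bluetooth" or "wifi"
    address: str   # hypothetical address used by the longer-range link


class Device:
    def __init__(self, name: str, carriers: set[str], address: str) -> None:
        self.name = name
        self.carriers = carriers   # longer-range radios this device supports
        self.address = address

    def nfc_offer(self) -> list[HandoverOffer]:
        # Over the short NFC link, advertise every longer-range carrier we support.
        return [HandoverOffer(c, self.address) for c in sorted(self.carriers)]


def negotiate(initiator: Device, target: Device) -> HandoverOffer | None:
    """Return the first carrier offered by `target` that `initiator` also supports."""
    for offer in target.nfc_offer():
        if offer.carrier in initiator.carriers:
            return offer
    return None  # no shared carrier: small payloads could still go over NFC itself


phone = Device("phone", {"bluetooth"}, "AA:BB:CC:DD:EE:FF")
laptop = Device("laptop", {"bluetooth", "wifi"}, "11:22:33:44:55:66")
print(negotiate(phone, laptop))
```

In this sketch a Bluetooth-only phone tapped against a laptop that supports both Bluetooth and Wi-Fi settles on Bluetooth for the bulk transfer; NFC is used only to agree on it.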

NFC enables electronic devices to exchange information and initiate applications automatically when they are brought into close proximity or touched together. NFC operates at 13.56 MHz in the unlicensed ISM (Industrial, Scientific and Medical) RF band and is fully compatible with existing contactless smart-card technologies, standards, and protocols such as FeliCa (Sony) and Mifare (Philips). NFC-enabled devices are interoperable with contactless smart-cards and smart-card readers conforming to these protocols. NFC range is approximately 0-20 cm (up to 8 in.), and communication is terminated either by a command from the application or when devices move out of range.
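The two ways a connection ends, by application command or by moving out of range, can be modelled as a small state machine. This is an illustrative sketch only: the class and method names are hypothetical, the 20 cm threshold is the upper end of the range quoted above, and real NFC hardware notices the loss of the RF field rather than measuring distance directly.

```python
from typing import Optional

MAX_RANGE_CM = 20.0  # upper end of the ~0-20 cm NFC range given in the text


class NfcSession:
    """Toy model of an NFC session's lifecycle (not a real NFC protocol stack)."""

    def __init__(self) -> None:
        self.active = False
        self.ended_by: Optional[str] = None

    def on_distance(self, distance_cm: float) -> None:
        """Feed in the current device separation, e.g. from repeated polling."""
        if distance_cm <= MAX_RANGE_CM:
            # Bringing the devices close together starts the session (once).
            if not self.active and self.ended_by is None:
                self.active = True
        elif self.active:
            self._end("out of range")

    def close(self) -> None:
        """The application can also terminate the session explicitly."""
        if self.active:
            self._end("application command")

    def _end(self, reason: str) -> None:
        self.active = False
        self.ended_by = reason


session = NfcSession()
for d in (50, 15, 4, 30):  # hypothetical distances in cm as a phone approaches and leaves
    session.on_distance(d)
print(session.active, session.ended_by)
```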

Relevant NFC applications

NFC opens up myriad new opportunities. It will enable people to effortlessly connect digital cameras, PDAs, video set-top boxes, computers and mobile phones. With NFC it is possible to connect any two devices to each other to exchange information or access content and services, easily and securely. Solution vendors argue that NFC’s intuitive operation makes it particularly easy for consumers to use, while its built-in security makes it ideal for mobile payment and financial transaction applications. NFC-enhanced consumer devices are also targeted at applications that exchange and store personal data such as messages, pictures, and MP3 files.

Applications for NFC are broad-reaching, and NFC has the potential to support multiple applications. Consequently, VDC has grouped NFC-related applications into three basic categories:

* Short-range, near-contact mobile transactions: applications such as access control or transport/event ticketing, where the NFC-enabled device storing the access code or ticket is presented near a reader. Also included are mobile payment (so-called m-commerce) applications, where the customer must confirm the financial transaction by entering a password or simply accepting the interaction, and applications requiring simple data capture, such as picking up an Internet URL from a smart label on a poster or advertisement;

* Short-range, near-contact linking transactions: connecting two NFC-enabled devices to enable a P2P transfer of data such as downloading music, exchanging images or synchronizing address books; and

* Short-range, near-contact discovery transactions: customers can explore a device's capabilities to find out which functions and services it offers, since NFC-enabled devices may offer more than one.

In order to provide a more complete understanding of the real-world potential for NFC, here are some example applications for NFC-enabled devices to consider:

* In addition to facilitating contactless smart-card-based transactions, emerging cell-phone multimedia capabilities could be leveraged to support NFC transactions such as the purchase and download of games, music, MP3 files, videos, software, and other files to NFC-enabled handheld devices by touching NFC-enabled computers;

* Consumers can make online travel reservations on a PC and download the reservations and/or tickets to a cell phone or PDA by bringing the mobile device close to the computer. They can then check in for the trip or hotel stay by touching the handheld device to the terminal or kiosk at the departure gate or check-in station. No printed documents, such as tickets and hotel receipts, are required;

* Posters, signs, and advertisements with RFID transponders can be scanned/read using an NFC-enabled device to download more information, make a purchase, such as paperless event tickets, and store other pertinent electronic data;

* Pictures can be taken using an NFC-enabled cell phone with an integrated digital camera. The device could then be presented to or touched against an NFC-enabled television, kiosk, computer or similar device to transmit images for display and/or printing; and

* In conjunction with another wireless technology that may provide longer range and greater bandwidth, large files can be transferred between two devices, such as a laptop and a desktop, simply by touching the two NFC-enabled devices together.

Moreover, the synergies between NFC and mobile services are becoming increasingly apparent. By integrating NFC applications with existing mobile services, mobile operators could secure new revenue opportunities by:

* Charging customers subscription fees;

* Charging retailers/service providers fees to use the system; levying fees for individual purchases or other transactions; or

* Applying service charges for adding value to the electronic cash value stored on a mobile phone via a mobile service.

What about NFC and the potential for ‘theft by RF’? First, the relatively short read range gives customers control over NFC and its applications. Second, NFC-enabled devices add another level of security over the traditional smart-card, as they can be switched on and off or protected with a passcode or voice biometric code for higher-volume transactions. For applications that require tighter security and perhaps anti-counterfeiting measures, chips can be used to store biometric information for identification.

NFC role in contactless smart-cards

Based on the ISO-14443 and ISO-15693 standards, high-frequency (13.56MHz) contactless smart-card solutions for access control, transit fare cards, and payment/m-commerce have advanced over the last few years in the U.S., Europe, and Asia. VDC believes the recent introduction of NFC-enabled cell phones that support RFID and contactless smart-card technology will foster market development and growth across vertical and application markets. However, the adoption of NFC-enabled solutions may be restricted by investments in legacy IT infrastructure and wireless communication. Access control, transit fare cards, and cell phones with smart-card “subscriber identity modules” (SIMs) are all relatively new technology platforms without worries of legacy infrastructure, so NFC is a viable, attractive solution for these applications. In mobile payment/m-commerce, however, the challenge for contactless smart-card applications in the U.S. has been the need to overhaul the existing hardware infrastructure.

The magstripe/dial-in combination dominates the U.S. payment processing market, yet it is costly because it adds communications overhead and security vulnerabilities to every transaction. In contrast, over the last 10 years the United Kingdom completely overhauled its infrastructure to migrate from magstripe to chip-based cards, issuing an estimated 80 million cards to date.

Over the last 10 years, financial contactless smart-cards have run up against the payment-processing and wireless-infrastructure roadblock. Meanwhile, a new de facto wireless communications infrastructure has, in effect, been built: the cell phone. Through NFC-enabled cell phones, e-commerce can be easily integrated into the wireless world. These devices provide secure storage for data, including confidential personal data such as credit card numbers, coupons, membership data or digital rights.

However, one of the critical success factors for NFC in mobile phones will be the support of a common standard by the major mobile manufacturers, a process that is in full swing. The top three mobile vendors in the world, Nokia, Motorola and Samsung, as well as NEC, Panasonic and Sony, have already joined the NFC Forum. Members of the NFC Forum also include MasterCard, Microsoft, Texas Instruments, and Visa.

Will NFC challenge Wi-Fi and Bluetooth?

Although there is always room for more wireless technology, some argue that introducing a new standard such as NFC alongside 802.11 and Bluetooth may prove to be an uphill battle. Others argue that NFC does not really step on Bluetooth or Wi-Fi: they simply do not see NFC being used to download pictures from digital cameras or as a WLAN, because it is too slow. At 212 kilobits per second, NFC’s data rate is nearer that of a 56K modem than the 1- or 7-Mbps speeds of Bluetooth or Wi-Fi.
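To put the quoted data rates in perspective, here is the ideal time to move a single photo over each link. The 1 MB photo size is an assumption, and protocol overhead is ignored, so real transfers would be slower.

```python
RATES_BPS = {
    "NFC (212 kbit/s)": 212_000,
    "Bluetooth (1 Mbit/s)": 1_000_000,
    "Wi-Fi (7 Mbit/s)": 7_000_000,
}


def transfer_seconds(size_bytes: int, rate_bps: float) -> float:
    """Ideal transfer time: payload bits divided by the nominal link rate (no overhead)."""
    return size_bytes * 8 / rate_bps


photo_bytes = 1_000_000  # assumed 1 MB photo
for name, rate in RATES_BPS.items():
    print(f"{name}: {transfer_seconds(photo_bytes, rate):.1f} s")
```

Under these assumptions the photo takes roughly 38 seconds over NFC, 8 seconds at 1 Mbps and just over a second at 7 Mbps, which illustrates why NFC suits setting up a connection better than carrying bulk data.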

Moreover, both Sony and Philips have 802.11 and Bluetooth products, and each insists that the NFC standard will complement the more established wireless networks. Like VDC, these market leaders believe there is room for a simple, less-expensive solution. In fact, with the ever-increasing complexity and cost of adding Wi-Fi and Bluetooth, price could become a major deciding factor for OEMs and manufacturers. NFC would reportedly cost 20¢ per chip, while Bluetooth is expected to drop to $4-5 per radio. If the promise of affordable chips is realized, NFC technology could be effectively leveraged in the near-to-mid term for payment and security/access applications.

Along with affordability, power drain has become a top concern in the 802.11 and Bluetooth markets. Because a passive NFC chip can draw its power from the reader's RF field rather than from a battery, NFC hopes to stand out from the rest. As a result, VDC sees NFC-enabled devices connecting myriad unpowered items such as RFID tags and smart-cards within the next three to five years.

Monday, August 21, 2006

Augmented Reality - The Future Is Unpredictable

The new technology, called augmented reality, will further blur the line between what's real and what's computer-generated by enhancing what we see, hear, feel and smell.

On the spectrum between virtual reality, which creates immersive, computer-generated environments, and the real world, augmented reality is closer to the real world. Augmented reality adds graphics, sounds, haptics and smell to the natural world as it exists. You can expect video games to drive the development of augmented reality, but this technology will have countless applications. Everyone from tourists to military troops will benefit from the ability to place computer-generated graphics in their field of vision.

Augmented reality will truly change the way we view the world. Picture yourself walking or driving down the street. With augmented-reality displays, which will eventually look much like a normal pair of glasses, informative graphics will appear in your field of view, and audio will coincide with whatever you see. These enhancements will be refreshed continually to reflect the movements of your head. In this article, we will take a look at this future technology, its components and how it will be used.

Augmenting Our World

The basic idea of augmented reality is to superimpose graphics, audio and other sense enhancements over a real-world environment in real time. Sounds pretty simple. Besides, haven't television networks been doing that with graphics for decades? Well, sure, but all television networks do is display a static graphic that does not adjust with camera movement. Augmented reality is far more advanced than any technology you've seen in television broadcasts, although early versions of augmented reality are starting to appear in televised races and football games, such as Racef/x and the superimposed first-down line, both created by SporTVision. These systems display graphics for only one point of view. Next-generation augmented-reality systems will display graphics for each viewer's perspective.
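What "graphics for each viewer's perspective" involves can be sketched with a pinhole projection that is recomputed whenever the head turns. This is a simplified illustration: it tracks only head yaw (turning left or right), the axis conventions are chosen for the example, and the focal length and display centre are hypothetical values; a real system would track the full position and orientation of the head.

```python
import math
from typing import Optional, Tuple


def project(point: Tuple[float, float, float],
            head_yaw_deg: float,
            focal_px: float = 800.0,
            center_px: Tuple[float, float] = (640.0, 360.0)) -> Optional[Tuple[float, float]]:
    """Map a world point (x right, y up, z forward, metres, viewer at the origin)
    to display pixel coordinates for a head turned by head_yaw_deg
    (positive = turned toward +x, i.e. to the right)."""
    x, y, z = point
    yaw = math.radians(head_yaw_deg)
    # Undo the head rotation so the point is expressed in the head's own frame.
    x_head = math.cos(yaw) * x - math.sin(yaw) * z
    z_head = math.sin(yaw) * x + math.cos(yaw) * z
    if z_head <= 0:
        return None  # point is beside or behind the viewer: nothing to draw
    # Pinhole projection: farther points land closer to the display centre.
    u = center_px[0] + focal_px * x_head / z_head
    v = center_px[1] - focal_px * y / z_head
    return (u, v)


label = (0.0, 0.0, 10.0)  # hypothetical label 10 m straight ahead
for yaw in (0, 5, 10):
    print(yaw, project(label, yaw))
```

As the head turns right, the label's horizontal pixel position moves left, so the graphic stays attached to the same spot in the world rather than to the screen.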

Augmented reality is still in an early stage of research and development at various universities and high-tech companies. Eventually, possibly by the end of this decade, we will see the first mass-marketed augmented-reality system, which one researcher calls "the Walkman of the 21st century." What augmented reality attempts to do is not only superimpose graphics over a real environment in real time, but also change those graphics to accommodate a user's head and eye movements, so that the graphics always fit the perspective. Here are the three components needed to make an augmented-reality system work:

- head-mounted display

- tracking system

- mobile computing power

The goal of augmented-reality developers is to incorporate these three components into one unit, housed in a belt-worn device that wirelessly relays information to a display that resembles an ordinary pair of eyeglasses. Let's take a look at each of the components of this system.

Head-mounted Displays

Just as monitors allow us to see text and graphics generated by computers, head-mounted displays (HMDs) will enable us to view graphics and text created by augmented-reality systems. So far, there haven't been many HMDs created specifically with augmented reality in mind. Most of the displays, which resemble some type of ski goggles, were originally created for virtual reality. There are two basic types of HMDs:

- video see-through

- optical see-through

Video see-through displays block out the wearer's surrounding environment, using small video cameras attached to the outside of the goggles to capture images. On the inside of the display, the video image is played in real-time and the graphics are superimposed on the video. One problem with the use of video cameras is that there is more lag, meaning that there is a delay in image-adjustment when the viewer moves his or her head.

Most companies that have made optical see-through displays have gone out of business. Sony makes a see-through display that some researchers use, called the Glasstron. Blair MacIntyre, director of the Augmented Environments Lab at Georgia Tech, believes that Microvision's Virtual Retinal Display holds the most promise for an augmented-reality system. This device uses light to paint images onto the retina by rapidly moving the light source across and down the retina. The problem with the Microvision display is that it currently costs about $10,000. MacIntyre says that the retinal-scanning display is promising because it has the potential to be small. He imagines an ordinary-looking pair of glasses with a light source on the side to project images onto the retina.

Using Augmented Reality

Once researchers overcome the challenges that face them, augmented reality will likely pervade every corner of our lives. It has the potential to be used in almost every industry, including:

- Maintenance and construction - This will likely be one of the first uses for augmented reality. Markers can be attached to a particular object that a person is working on, and the augmented-reality system can draw graphics on top of it. This is a simpler form of augmented reality, since the system only has to know where the user is in relation to the object that he or she is looking at. It's not necessary to track the person's exact physical location.

- Instant information - Tourists and students could use these systems to learn more about a certain historical event. Imagine walking onto a Civil War battlefield and seeing a re-creation of historical events on a head-mounted, augmented-reality display. It would immerse you in the event, and the view would be panoramic.

- Gaming - How cool would it be to take video games outside? The game could be projected onto the real world around you, and you could, literally, be in it as one of the characters. One Australian researcher has created a prototype game that combines Quake, a popular video game, with augmented reality. He put a model of a university campus into the game's software. Now, when he uses this system, the game surrounds him as he walks across campus.

What Made Dell Recall Its Batteries

To understand what's going on, it's helpful to know a little bit about how batteries work. Batteries have a negatively charged terminal and a positively charged terminal. In a battery, energy from electrochemical reactions causes electrons (negatively charged particles) to collect at the battery's negatively charged pole. Charged particles are attracted to opposite charge, so if you connect a battery to a circuit, the electrons will flow from the negative pole, through the circuit and to the battery's positively charged pole. In other words, the battery generates a moving charge, or electricity.

The exact reaction that generates the electrons varies, depending on the type of battery. In a lithium-ion battery, you'll find pressurized containers that house a coil of metal and a flammable, lithium-containing liquid. The manufacturing process creates tiny pieces of metal that float in the liquid. Manufacturers can't completely prevent these metal fragments, but good manufacturing techniques limit their size and number. The cells of a lithium-ion battery also contain separators that keep the cathodes and anodes (the positive and negative electrodes) from touching each other.

If the battery gets hot through use or recharging, the pieces of metal can move around, much like grains of rice in a pot of water. If a piece of metal gets too close to the separator, it can puncture the separator and cause a short circuit. There are a few possible scenarios for what can go wrong in the case of a short circuit:

* If it creates a spark, the flammable liquid can ignite, causing a fire.

* If it causes the temperature inside the battery to rise rapidly, the battery can explode due to the increased pressure.

* If it causes the temperature to rise slowly, the battery can melt, and the liquid inside can leak out.

There are several reasons why multiple laptop battery models have been recalled in the past few years. People want small, lightweight laptops that they can use for long periods. They also want their laptops to have bright screens and lots of processing power. For these reasons, laptop batteries have to be relatively small, but they also have to hold a lot of energy and last a long time.

Making lithium-ion batteries that can hold more power for a longer period requires vital components, including the separators, to be small and thin. The reduction in size makes it more likely that the batteries can fail, break, leak or short circuit.

Friday, August 18, 2006

MRAM - A New Technology in the Queue

Technology

Unlike conventional RAM chip technologies, MRAM stores data not as electric charge or current flows but in magnetic storage elements. Each element is formed from two ferromagnetic plates, each of which can hold a magnetic field, separated by a thin insulating layer. One of the two plates is a permanent magnet set to a particular polarity; the other's field changes to match that of an external field. A memory device is built from a grid of such "cells".

Reading is accomplished by measuring the electrical resistance of the cell. A particular cell is (typically) selected by powering an associated transistor, which switches current from a supply line through the cell to ground. Due to the magnetic tunnel effect, the electrical resistance of the cell depends on the relative orientation of the fields in the two plates. By measuring the resulting current, the resistance of any particular cell can be determined, and from this the polarity of the writable plate. Typically, if the two plates have the same polarity this is considered to mean "0", while if the two plates are of opposite polarity the resistance will be higher and this means "1".
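The read step described above can be sketched as an Ohm's-law calculation followed by a threshold. The resistance values and read voltage are hypothetical, chosen only to illustrate the idea, and the reference is simply the midpoint between the two states; real sense circuitry is more involved.

```python
R_SAME = 1_000.0       # ohms, hypothetical: plates with the same polarity ("0")
R_OPPOSITE = 1_500.0   # ohms, hypothetical: plates with opposite polarity ("1")
READ_VOLTAGE = 0.1     # volts, hypothetical read bias across the selected cell


def tmr_ratio(r_low: float, r_high: float) -> float:
    """Relative resistance change between the two magnetic states."""
    return (r_high - r_low) / r_low


def read_bit(measured_current_a: float) -> int:
    """Recover the stored bit from the current through the selected cell."""
    resistance = READ_VOLTAGE / measured_current_a   # Ohm's law: R = V / I
    reference = (R_SAME + R_OPPOSITE) / 2            # midpoint between the two states
    return 1 if resistance > reference else 0


for r in (R_SAME, R_OPPOSITE):
    current = READ_VOLTAGE / r
    print(f"{r:.0f} ohm -> {current * 1e6:.1f} uA -> bit {read_bit(current)}")
print(f"TMR ratio: {tmr_ratio(R_SAME, R_OPPOSITE):.0%}")
```

The larger the resistance difference between the two states, the easier it is to tell them apart reliably with a single current measurement.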

A newer technique, spin-torque-transfer (STT), uses spin-aligned ("polarized") electrons to directly torque the domains. Specifically, if the electrons flowing into a layer have to change their spin, this develops a torque that is transferred to the nearby layer. This lowers the amount of current needed to write the cells, making it about the same as that of the read process. There are concerns that the "classic" type of MRAM cell will have difficulty at high densities due to the amount of current needed during writes, a problem STT avoids. For this reason, STT proponents expect the technique to be used for devices of 65 nm and smaller. The downside is that, at present, STT needs to switch more current through the control transistor than conventional MRAM, requiring a larger transistor, and it requires spin coherence to be maintained. Overall, however, STT requires much less write current than conventional or toggle MRAM.

Overall

Much research is still needed before MRAM reaches the market.

MRAM has speeds similar to SRAM and density similar to DRAM but much lower power consumption than DRAM, and compared with Flash memory it is much faster and suffers no degradation over time. It is this combination of features that leads some to call it the "universal memory", able to replace SRAM, DRAM, EEPROM and Flash. This also explains the huge amount of research being carried out into developing it.

Wednesday, August 16, 2006

Automatic Dependent Surveillance Broadcast

Satellite navigation and positioning took a step closer to ruling the skies

Automatic Dependent Surveillance Broadcast (ADS-B) has been described as “the backbone of the Next Generation System”, and GPS in turn supplies the data backbone for ADS-B.

ADS-B, which has been in pilot-phase testing in the USA for several years, relies on GPS as the major source of data for both the airliner cockpit and the traffic control tower, replacing radar.

ADS-B will provide real-time cockpit displays of traffic information, both on the ground and in the air, giving pilots and controllers a better sense of what’s going on around them at any given time.

After the ADS-B system was implemented, the accident rate decreased by 49%.

Part of the reason for this may be the increased position accuracy provided by GPS. “The ADS-B system presents an advantage over secondary radar. The horizontal position accuracy does not depend on the distance of the aircraft with regard to the ground station. With radar, the farther an aircraft is from the antenna, the less accurate its computed position is.

“In the ADS-B system, the position is known with an accuracy of about 25 meters for an airborne GPS receiver and about 5 meters for a DGPS one.” With a secondary radar, by comparison, the azimuth accuracy is within 0.08 degrees and the distance accuracy within 70 meters.
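The distance dependence described above follows from simple geometry: a fixed angular error turns into a larger position error the farther away the aircraft is (arc length = distance × angle in radians). This sketch uses the 0.08-degree azimuth figure and the 25 m airborne GPS figure quoted above; the sample distances are arbitrary.

```python
import math

AZIMUTH_ERR_DEG = 0.08   # secondary-radar azimuth accuracy quoted above
GPS_ERR_M = 25.0         # airborne GPS accuracy quoted above (independent of distance)


def radar_cross_range_error_m(distance_m: float) -> float:
    """Arc length subtended by the azimuth error at a given distance (s = r * theta)."""
    return distance_m * math.radians(AZIMUTH_ERR_DEG)


for km in (10, 50, 100, 200):
    err = radar_cross_range_error_m(km * 1000)
    print(f"{km:>4} km: radar cross-range ~{err:.0f} m, GPS ~{GPS_ERR_M:.0f} m")
```

With these figures the radar's cross-range error is about 14 m at 10 km, about 70 m at 50 km and about 280 m at 200 km, while the GPS figure stays at roughly 25 m regardless of distance.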

How ADS-B Works

Aircraft transponders receive GPS signals and determine the aircraft’s precise location. The system converts that position into a unique digital code and combines it with other data from the aircraft’s flight monitoring system, such as the type of aircraft, its speed, its flight number, and whether it is turning, climbing, or descending. An integrity indicator derived from the GPS horizontal protection limit (HPL) is also proposed in the European version of ADS-B under testing by Eurocontrol.

The aircraft’s transponder automatically broadcasts a code containing all of this data once per second. Appropriately equipped aircraft and ADS-B ground stations up to 200 miles away will receive the broadcasts. The ground stations add radar-based targets for non-ADS-B-equipped aircraft and rebroadcast the data to all equipped aircraft, in a function called Traffic Information Service-Broadcast (TIS-B). Ground stations also broadcast weather information from the National Weather Service and flight information, such as temporary flight restrictions, in the Flight Information Service-Broadcast (FIS-B).
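The reception rule described above (any suitably equipped receiver within 200 miles hears the once-per-second broadcast) can be sketched with a great-circle distance check. This is a simplified model under stated assumptions: the `AdsbReport` fields are hypothetical and merely mirror the data listed above, real ADS-B messages use a compact binary encoding, the 200 miles are treated as statute miles, and real reception also depends on line of sight.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_MI = 3958.8     # mean Earth radius in statute miles
RECEPTION_RANGE_MI = 200.0   # reception range quoted in the text (assumed statute miles)


@dataclass
class AdsbReport:
    """Hypothetical, simplified broadcast; field names are illustrative only."""
    flight: str
    aircraft_type: str
    lat: float        # degrees
    lon: float        # degrees
    speed_kt: float
    vertical: str     # "climbing", "descending" or "level"


def distance_mi(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points using the haversine formula."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_MI * math.asin(math.sqrt(a))


def receivable(report: AdsbReport, rx_lat: float, rx_lon: float) -> bool:
    """True if a receiver at (rx_lat, rx_lon) lies within the quoted broadcast range."""
    return distance_mi(report.lat, report.lon, rx_lat, rx_lon) <= RECEPTION_RANGE_MI


# Hypothetical broadcasts heard by a ground station at 0 N, 0 E.
near = AdsbReport("TEST1", "A320", 0.0, 1.0, 250.0, "climbing")  # about 69 miles away
far = AdsbReport("TEST2", "B737", 0.0, 5.0, 430.0, "level")      # about 345 miles away
print([r.flight for r in (near, far) if receivable(r, 0.0, 0.0)])
```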

Pilots will see the data on their cockpit traffic display screens, and air traffic controllers will see the information on displays they are already using, so little additional training should be needed for them to accept the system.

Monday, August 14, 2006

Holographic Memory

Devices that use light to store and read data have been the backbone of data storage for nearly two decades. Compact discs revolutionized data storage in the early 1980s, allowing hundreds of megabytes of data to be stored on a disc that has a diameter of a mere 12 centimeters and a thickness of about 1.2 millimeters. In 1997, an improved version of the CD, called a digital versatile disc (DVD), was released, which enabled full-length movies to be stored on a single disc.

CDs and DVDs are the primary data storage methods for music, software, personal computing and video. A CD can hold 783 megabytes of data, which is equivalent to about one hour and 15 minutes of music, but Sony has plans to release a 1.3-gigabyte (GB) high-capacity CD. A double-sided, double-layer DVD can hold 15.9 GB of data, which is about eight hours of movies. These conventional storage media meet today's storage needs, but storage technologies have to evolve to keep pace with increasing consumer demand. CDs, DVDs and magnetic storage all store bits of information on the surface of a recording medium. In order to increase storage capabilities, scientists are now working on a new optical storage method, called holographic memory, that will go beneath the surface and use the volume of the recording medium for storage, instead of only the surface area.
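The 783 MB figure can be checked from the CD audio format itself: 44,100 samples per second, 16 bits per sample, two channels. The 74-minute playing time used below is the standard CD length, close to the "one hour and 15 minutes" quoted. This is the raw audio payload; stored as computer data with the extra error correction CD-ROM uses, the same disc holds less.

```python
SAMPLE_RATE_HZ = 44_100   # CD audio samples per second, per channel
BITS_PER_SAMPLE = 16
CHANNELS = 2              # stereo
MINUTES = 74              # standard CD playing time

bytes_per_second = SAMPLE_RATE_HZ * BITS_PER_SAMPLE * CHANNELS // 8
total_bytes = bytes_per_second * MINUTES * 60

print(f"{bytes_per_second:,} bytes/s x {MINUTES} min = {total_bytes:,} bytes "
      f"(about {total_bytes / 1e6:.0f} MB)")
```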

Three-dimensional data storage will be able to store more information in a smaller space and offer faster data transfer times.

Holographic memory offers the possibility of storing 1 terabyte (TB) of data in a sugar-cube-sized crystal. A terabyte of data equals 1,000 gigabytes, 1 million megabytes or 1 trillion bytes. Data from more than 1,000 CDs could fit on a holographic memory system. Most computer hard drives only hold 10 to 40 GB of data, a small fraction of what a holographic memory system might hold.
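The comparisons in this paragraph follow from simple division, using the decimal units defined above, the 783 MB CD figure from the previous paragraph and the 40 GB upper end of the quoted hard-drive range.

```python
TERABYTE = 10**12          # bytes, decimal definition used in the text
CD_BYTES = 783 * 10**6     # CD capacity quoted above
DRIVE_BYTES = 40 * 10**9   # largest typical hard drive quoted above

cds_per_tb = TERABYTE // CD_BYTES        # whole CDs that fit in 1 TB
drives_per_tb = TERABYTE / DRIVE_BYTES   # 40 GB drives needed to hold 1 TB

print(f"1 TB holds {cds_per_tb:,} full CDs and equals {drives_per_tb:.0f} drives of 40 GB")
```

The result, 1,277 CDs, is consistent with the "more than 1,000 CDs" claim, and even a 40 GB drive holds only 4% of a terabyte.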

Surgical Simulators

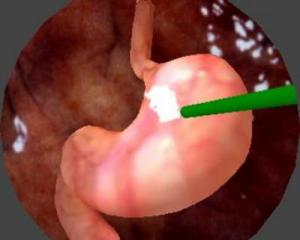

“The most important single factor that determines the success of a surgical procedure is the skill of the surgeon,” said Suvranu De, assistant professor of mechanical, aerospace, and nuclear engineering and director of the Advanced Computational Research Lab at Rensselaer. It is therefore not surprising, he notes, that more people die each year from medical errors in hospitals than from motor vehicle accidents, breast cancer, or AIDS, according to a 2000 report by the Institute of Medicine.

De and his colleagues at Rensselaer are seeking to improve surgical training by developing a new type of virtual simulator. Based on the science of haptics (the study of sensing through touch), the new simulator will provide an immersive environment for surgeons to touch, feel, and manipulate computer-generated 3-D tissues and organs with tool handles used in actual surgery. Such a simulator could standardize the assessment of surgical skills and avert the need for the cadavers and animals currently used in training, according to De.

“The sense of touch plays a fundamental role in the performance of a surgeon,” De said. “This is not a video game. People’s lives are at stake, so when training surgeons, you better be doing it well.”

Surgical simulators — even more than flight simulators — are based on intense computation. To program the realism of touch feedback from a surgical probe navigating through soft tissue, the researchers must develop efficient computer models that perform 30 times faster than real-time graphics, solving complex sets of partial differential equations about a thousand times a second, De said.
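The "30 times faster" figure is essentially a comparison of update rates. A quick check, assuming (this is not stated in the text) that real-time graphics refresh about 30 times per second, while the haptic loop runs about 1,000 times per second as quoted:

```python
HAPTIC_RATE_HZ = 1_000   # force updates per second, as quoted
GRAPHICS_RATE_HZ = 30    # assumed typical real-time graphics frame rate

haptic_budget_ms = 1_000 / HAPTIC_RATE_HZ      # time available per force update
graphics_budget_ms = 1_000 / GRAPHICS_RATE_HZ  # time available per rendered frame
speedup = graphics_budget_ms / haptic_budget_ms

print(f"{haptic_budget_ms:.1f} ms per force update vs {graphics_budget_ms:.1f} ms per frame "
      f"({speedup:.0f}x less time)")
```

Under this assumption, every physics update, including solving the tissue equations, has to finish in about a millisecond.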

The major challenge to current technologies is the simulation of soft biological tissues, according to De. Such tissues are heterogeneous and viscoelastic, meaning they exhibit characteristics of both solids and liquids — similar to chewing gum or silly putty. And surgical procedures such as cutting and cauterizing are almost impossible to simulate with traditional techniques.
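A common textbook way to capture "both solid and liquid" behaviour is the Kelvin-Voigt model, which adds a spring (elastic) force to a dashpot (viscous) force. This is an illustrative stand-in, not the tissue model used by De's group, and the stiffness and damping values are hypothetical.

```python
STIFFNESS_N_PER_M = 200.0   # hypothetical spring constant (solid-like part)
DAMPING_NS_PER_M = 5.0      # hypothetical damping coefficient (liquid-like part)


def kelvin_voigt_force(displacement_m: float, velocity_m_per_s: float) -> float:
    """Resisting force of a Kelvin-Voigt element: spring term plus dashpot term."""
    elastic = STIFFNESS_N_PER_M * displacement_m
    viscous = DAMPING_NS_PER_M * velocity_m_per_s
    return elastic + viscous


# The same 2 mm indentation, pressed slowly and then quickly.
for speed in (0.001, 0.05):
    print(f"{speed} m/s -> {kelvin_voigt_force(0.002, speed):.3f} N")
```

Pressing faster produces a larger force for the same indentation, a rate dependence that a pure spring model cannot reproduce.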

To overcome these barriers, De’s group has developed a new computational tool called the Point-Associated Finite Field (PAFF) approach, which models human tissue as a collection of particles with distinct, overlapping zones of influence that produce coordinated, elastic movements. A single point in space models each spot, while its relationship to nearby points is determined by the equations of physics. The localized points migrate along with the tip of the virtual instrument, much like a roving swarm of bees.
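The idea of points with overlapping zones of influence can be illustrated with a generic meshfree interpolation: each node contributes to a nearby location in proportion to a weight that falls to zero at the edge of its zone. This sketch uses Shepard (normalized-weight) interpolation with a simple kernel chosen for illustration; it is not the PAFF formulation itself, and the node layout, radius and values are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def weight(r: float, radius: float) -> float:
    """Weight of a node at distance r: 1 at the node, falling to 0 at its radius of influence."""
    if r >= radius:
        return 0.0
    return (1.0 - r / radius) ** 2


def interpolate(p: Point, nodes: Sequence[Point], values: Sequence[float], radius: float) -> float:
    """Blend nodal values at p using only the nodes whose zones of influence cover p."""
    weights = [weight(math.dist(p, n), radius) for n in nodes]
    total = sum(weights)
    if total == 0.0:
        raise ValueError("p lies outside every node's zone of influence")
    # Normalizing makes the weights sum to 1, so a uniform field is reproduced exactly.
    return sum(w * v for w, v in zip(weights, values)) / total


nodes = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]   # hypothetical tissue points
displacement = [0.0, 1.0, 0.0]                 # hypothetical nodal displacements (mm)
print(interpolate((0.5, 0.0), nodes, displacement, radius=1.5))
```

Because each node influences only a small neighbourhood, evaluating the field near the virtual instrument's tip involves only the handful of nodes around it.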

Sunday, August 13, 2006

High Altitude Broadband Technology

The CAPANINA project, which uses balloons, airships or unmanned solar-powered planes as high-altitude platforms (HAPs) to relay wireless and optical communications, is due to finish its main research at the end of October.

The consortium behind the project will open York HAP Week, a conference from 23 to 27 October, which will showcase the applications of HAPs, as a springboard for future development in this new high-tech sector.

The CAPANINA Final Exhibition will open the conference by highlighting the achievements of the project, which received funding from the EU under its FP6 Broadband-for-All programme.

The consortium, drawn from Europe and Japan, has demonstrated how the system could bring low-cost broadband connections to remote areas and even to high-speed trains. It promises data rates 2,000 times faster than a traditional modem and 100 times faster than today's 'wired' ADSL broadband.
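The two speed comparisons can be turned into rough absolute figures. The 56 kbit/s dial-up modem speed is an assumption (the text does not give a baseline), and the ADSL figure below is implied by the two quoted ratios rather than stated in the source.

```python
MODEM_KBPS = 56.0      # assumed "traditional" dial-up modem speed
HAP_VS_MODEM = 2_000   # ratio quoted for the HAP link
HAP_VS_ADSL = 100      # ratio quoted versus wired ADSL

hap_kbps = MODEM_KBPS * HAP_VS_MODEM         # implied HAP data rate
implied_adsl_kbps = hap_kbps / HAP_VS_ADSL   # ADSL speed consistent with both ratios

print(f"HAP link: about {hap_kbps / 1000:.0f} Mbit/s; "
      f"implied ADSL: about {implied_adsl_kbps / 1000:.2f} Mbit/s")
```

Under this assumption the HAP link would run at roughly 112 Mbit/s, and the two ratios together imply an ADSL baseline of about 1.1 Mbit/s.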

CAPANINA's Principal Scientific Officer Dr David Grace said: "The potential of the system is huge, with possible applications ranging from communications for disaster management and homeland security, to environmental monitoring and providing broadband for developing countries. So far, we have considered a variety of aerial platforms, including airships, balloons, solar-powered unmanned planes and normal aeroplanes -- the latter will probably be particularly suited to establish communications very swiftly in disaster zones."

The final experimental flight will use a US-built Unmanned Aerial Vehicle (UAV) and will take place in Arizona days before the York HAP Week conference, which will be held at York's historic King's Manor.

Following the CAPANINA event, a HAP Application Symposium led by Dr Jorge Pereira, of the Information Society and Media Directorate-General of the European Commission, will provide a forum for leading experts to illustrate the potential of HAPs to opinion formers and telecommunications providers.

Completing the week will be the first HAPCOS Workshop, featuring the work of leading researchers from around Europe. It will focus on wireless and optical communications from HAPs, as well as the critically important field of HAP vehicle development.

The Chair of HAPCOS, Tim Tozer, of the University of York's Department of Electronics, said: "There are a number of projects worldwide that are proving the technology and we want to convince the telecommunications and the wider community of its potential. We are particularly keen to attract aerial vehicle providers."

The CAPANINA and HAPCOS activities have helped to forge collaborative links with more than 25 countries, including many in Europe, as well as Japan, South Korea, China, Malaysia and the USA. The partners are seeking to develop existing partnerships and forge new ones with researchers, entrepreneurs, industry, governments and end users.

Magnetic Memory

Physicists at the University of Leeds have embarked upon an ambitious multimillion-dollar project to develop new materials that they hope will allow computers to become faster and smaller. Instead of using electrical charges to represent data, processors and memory made of the new materials would rely on magnetism. The researchers claim that using magnetism in place of electricity could eventually provide an excellent basis for quantum computing, an area of study that other researchers are also exploring.

Imagine your computer's operating system loading the very instant that you switch it on, or having a computer that is more efficient because its memory does not have to replenish thousands of lost electrical charges every second. According to the researchers involved, these improvements and more are completely feasible, as the basic technology is already used in some memory chips and in the high-performance hard disks found in devices like iPods. "The iPod wouldn't have been possible without the high-speed drive inside it. In the past, simple coils of wire were used but the individual bits of data are now so tiny that exquisitely sensitive detectors are needed to get the data back," said researcher Dr. Chris Marrows. Using magnetism in microelectronics in such ways is increasingly referred to as spintronics.

Led by Leeds' Professor Brian Hickey, a multi-institutional group of researchers is looking for new ways to exploit spintronics and to further understand the properties of magnetism. The team has already established that magnetism could be used to manipulate the flow of electrons in a component, which means that a chip could reconfigure itself in the most effective way for a given calculation. Spintronic memory is superior to the flash memory chips used in digital cameras: its write speed is much faster and it will not degrade over time.

The Leeds researchers are currently using a "sputter" machine to make spintronic materials one atom-thin layer at a time. "We are in effect spray painting with atoms in the sputter machine. It gives us the control to build materials layer by layer. It's the same process - but much more clean and controlled - which causes thin gray layers of gunk to form at the ends of a fluorescent light bulb," said Dr. Marrows.

As the sophistication of spintronic devices increases, Dr. Marrows is optimistic about eventually using the spin of electrons to store and process information. "Ultimately in the extreme case of operating on the single spin of an electron, spintronics will be an excellent basis for quantum computing," he enthused.

Tracking Systems

The Basics of Tracking Systems

Location tracking is not a single technology. Rather, it is the convergence of several technologies that can be combined to create systems that track inventory, livestock or vehicle fleets. Similar systems can be created to deliver location-based services to wireless devices.

Current technologies being used to create location-tracking and location-based systems include:

* Geographic Information Systems (GIS) - For large-scale location-tracking systems, it is necessary to capture and store geographic information. Geographic information systems can capture, store, analyze and report geographic information.

* Global Positioning System (GPS) - A constellation of 27 Earth-orbiting satellites (24 in operation and three extras in case one fails). A GPS receiver, like the one in your mobile phone, can locate four or more of these satellites, figure out the distance to each, and deduce your location through trilateration. For trilateration to work, the receiver must have a clear line of sight to these four or more satellites. GPS is ideal for outdoor positioning, such as surveying, farming, transportation or military use (for which it was originally designed). See How GPS Receivers Work for more information.

* Radio Frequency Identification (RFID) - Small, battery-less microchips that can be attached to consumer goods, cattle, vehicles and other objects to track their movements. These passive tags transmit data only when prompted by a reader. The reader transmits radio waves that activate the RFID tag, and the tag then transmits its information on a pre-determined radio frequency. The reader captures this information and passes it to a central database. One possible use for RFID tags is as a replacement for traditional UPC bar codes. See How RFIDs Work for more information.

* Wireless Local Area Network (WLAN) - A network of devices that connect via radio, using a standard such as 802.11b. These devices pass data over radio waves and give users a network with a range of 70 to 300 feet (21.3 to 91.4 meters).
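Trilateration can be illustrated with a simplified two-dimensional sketch: three beacons at known positions and exact measured distances, solved by subtracting one circle equation from the others to get a small linear system. This is a toy under stated assumptions, not how a GPS receiver is implemented. Real GPS works in three dimensions and must also solve for the receiver's clock error, which is why it needs at least four satellites. The function name `trilaterate_2d` is hypothetical.

```python
import math


def trilaterate_2d(beacons, distances):
    """Estimate (x, y) from three known beacon positions and measured ranges.

    Each range gives a circle (x - xi)^2 + (y - yi)^2 = di^2. Subtracting the
    first circle from the other two cancels the x^2 + y^2 terms, leaving a
    2x2 linear system a*x + b*y = c, solved here with Cramer's rule.
    """
    (x1, y1), (x2, y2), (x3, y3) = beacons
    d1, d2, d3 = distances
    a1, b1 = 2 * (x2 - x1), 2 * (y2 - y1)
    c1 = d1**2 - d2**2 - x1**2 + x2**2 - y1**2 + y2**2
    a2, b2 = 2 * (x3 - x1), 2 * (y3 - y1)
    c2 = d1**2 - d3**2 - x1**2 + x3**2 - y1**2 + y3**2
    det = a1 * b2 - a2 * b1
    if abs(det) < 1e-12:
        raise ValueError("beacons are collinear; position is ambiguous")
    return ((c1 * b2 - c2 * b1) / det, (a1 * c2 - a2 * c1) / det)


# Hypothetical example: beacons at three known points, receiver actually at (3, 4).
beacons = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
true_pos = (3.0, 4.0)
distances = [math.hypot(true_pos[0] - bx, true_pos[1] - by) for bx, by in beacons]
x, y = trilaterate_2d(beacons, distances)
print(f"estimated position: ({x:.2f}, {y:.2f})")
```

With noisy real-world ranges the circles do not meet at a single point, so practical systems use more measurements and a least-squares fit instead of an exact solve.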

Any location tracking or location-based service system will use one or a combination of these technologies. The system requires that a node or tag be placed on the object, animal or person being tracked. For example, the GPS receiver in a cell phone or an RFID tag on a DVD can be used to track those devices with a detection system such as GPS satellites or RFID receivers.

Saturday, August 12, 2006

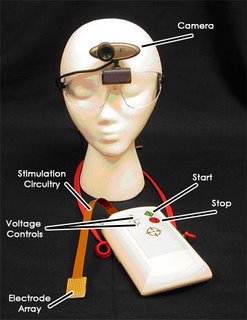

Brainport - really astonishing

Well, not exactly through her tongue, but the device in her mouth sent visual input through her tongue in much the same way that seeing individuals receive visual input through the eyes. In both cases, the initial sensory input mechanism -- the tongue or the eyes -- sends the visual data to the brain, where that data is processed and interpreted to form images. What we're talking about here is electrotactile stimulation for sensory augmentation or substitution, an area of study that involves using encoded electric current to represent sensory information -- information that a person cannot receive through the traditional channel -- and applying that current to the skin, which sends the information to the brain. The brain then learns to interpret that sensory information as if it were being sent through the traditional channel for such data. In the 1960s and '70s, this process was the subject of ground-breaking research in sensory substitution at the Smith-Kettlewell Institute led by Paul Bach-y-Rita, MD, Professor of Orthopedics and Rehabilitation and Biomedical Engineering at the University of Wisconsin, Madison. Now it's the basis for Wicab's BrainPort technology (Dr. Bach-y-Rita is also Chief Scientist and Chairman of the Board of Wicab).

You'll be astonished at how it happens

The multiple channels that carry sensory information to the brain, from the eyes, ears and skin, for instance, are set up in a similar manner to perform similar activities. All sensory information sent to the brain is carried by nerve fibers in the form of patterns of impulses, and the impulses end up in the different sensory centers of the brain for interpretation. To substitute one sensory input channel for another, you need to correctly encode the nerve signals for the sensory event and send them to the brain through the alternate channel. The brain appears to be flexible when it comes to interpreting sensory input. You can train it to read input from, say, the tactile channel, as visual or balance information, and to act on it accordingly. In JS Online's "Device may be new pathway to the brain," University of Wisconsin biomedical engineer and BrainPort technology co-inventor Mitch Tyler states, "It's a great mystery as to how that process takes place, but the brain can do it if you give it the right information."

The concepts at work behind electrotactile stimulation for sensory substitution are complex, and the mechanics of implementation are no less so. The idea is to communicate non-tactile information via electrical stimulation of the sense of touch. In practice, this typically means that an array of electrodes receiving input from a non-tactile information source (a camera, for instance) applies small, controlled, painless currents (some subjects report that it feels something like soda bubbles) to the skin at precise locations according to an encoded pattern. The encoding of the electrical pattern essentially attempts to mimic the input that would normally be received by the non-functioning sense. So patterns of light picked up by a camera to form an image, replacing the perception of the eyes, are converted into electrical pulses that represent those patterns of light. When the encoded pulses are applied to the skin, the skin is actually receiving image data. According to Dr. Kurt Kaczmarek, BrainPort technology co-inventor and Senior Scientist with the University of Wisconsin Department of Orthopedics and Rehabilitation Medicine, what happens next is that "the electric field thus generated in subcutaneous tissue directly excites the afferent nerve fibers responsible for normal, mechanical touch sensations." Those nerve fibers forward their image-encoded touch signals to the tactile-sensory area of the cerebral cortex, the parietal lobe.
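The camera-to-electrode encoding step can be sketched roughly: average the camera image over blocks, one block per electrode, and map each block's brightness to a stimulation level. The 12 x 12 grid, the 16 levels and the function name `encode_frame` are assumptions for illustration. The article does not describe BrainPort's actual electrode layout or pulse encoding, and this is not it.

```python
def encode_frame(image, grid=12, levels=16):
    """Reduce a grayscale camera frame to a grid x grid stimulation pattern.

    image: list of rows of pixel brightness values in 0..255.
    Returns a grid x grid list of integer levels in 0..levels-1, one per
    (hypothetical) electrode; brighter regions map to stronger stimulation.
    """
    h, w = len(image), len(image[0])
    if h < grid or w < grid:
        raise ValueError("image must be at least grid x grid pixels")
    pattern = []
    for r in range(grid):
        r0, r1 = r * h // grid, (r + 1) * h // grid  # pixel rows for this electrode row
        row = []
        for c in range(grid):
            c0, c1 = c * w // grid, (c + 1) * w // grid
            block = [image[y][x] for y in range(r0, r1) for x in range(c0, c1)]
            mean = sum(block) / len(block)  # average brightness of the block
            row.append(round(mean / 255 * (levels - 1)))  # quantize to a level
        pattern.append(row)
    return pattern


# A 24 x 24 test frame: bright left half, dark right half.
frame = [[255] * 12 + [0] * 12 for _ in range(24)]
pattern = encode_frame(frame)
for row in pattern[:2]:
    print(row)
```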

After training in laboratory tests, blind subjects were able to perceive visual traits like looming, depth, perspective, size and shape. The subjects could still feel the pulses on their tongue, but they could also perceive images generated from those pulses by their brain. The subjects perceived the objects as "out there" in front of them, separate from their own bodies. They could perceive and identify letters of the alphabet. In one case, when blind mountain climber Erik Weihenmayer was testing out the device, he was able to locate his wife in a forest. One of the most common questions at this point is, "Are they really seeing?" That all depends on how you define vision.
If seeing means you can identify the letter "T" somewhere outside yourself, sense when that "T" is getting larger, smaller, changing orientation or moving farther away from your own body, then they're really seeing. One study that conducted PET brain scans of congenitally blind people while they were using the BrainPort vision device found that after several sessions with BrainPort, the vision centers of the subjects' brains lit up when visual information was sent to the brain through the tongue. If "seeing" means there's activity in the vision center of the cerebral cortex, then the blind subjects are really seeing.

The BrainPort test results are somewhat astonishing and lead many to wonder about the scope of applications for the technology.

Friday, August 11, 2006

Biometric Techniques

Iris Scanning

Iris scanning can seem very futuristic, but at the heart of the system is a simple CCD digital camera. It uses both visible and near-infrared light to take a clear, high-contrast picture of a person's iris. With near-infrared light, a person's pupil is very black, making it easy for the computer to isolate the pupil and iris.

When you look into an iris scanner, either the camera focuses automatically or you use a mirror or audible feedback from the system to make sure that you are positioned correctly. Usually, your eye is 3 to 10 inches from the camera. When the camera takes a picture, the computer locates:

- The center of the pupil

- The edge of the pupil

- The edge of the iris

- The eyelids and eyelashes

It then analyzes the patterns in the iris and translates them into a code.
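One well-known way to compare iris codes, associated with John Daugman's work, treats each code as a string of bits and measures the fraction of bits that differ, ignoring bits masked out by eyelids, eyelashes or reflections. The article does not say which matching method scanners use, so the sketch below, including the 8-bit codes and the 0.32 threshold, is an illustrative assumption. Real iris codes are far longer and are usually compared at several rotations to tolerate head tilt.

```python
def iris_hamming_distance(code_a, code_b, mask_a, mask_b):
    """Fraction of disagreeing bits among bits that are valid in both codes.

    Codes and masks are ints used as bit vectors; a mask bit of 1 means that
    bit was not obscured by eyelids, eyelashes or reflections.
    """
    valid = mask_a & mask_b
    n_valid = bin(valid).count("1")
    if n_valid == 0:
        raise ValueError("no overlapping valid bits to compare")
    disagreements = bin((code_a ^ code_b) & valid).count("1")
    return disagreements / n_valid


enrolled_code, enrolled_mask = 0b10110010, 0b11111111
probe_code, probe_mask = 0b10110110, 0b11111110  # lowest bit hidden by an eyelash
hd = iris_hamming_distance(enrolled_code, probe_code, enrolled_mask, probe_mask)
MATCH_THRESHOLD = 0.32  # hypothetical decision threshold
print(f"distance = {hd:.3f}, match = {hd < MATCH_THRESHOLD}")
```

A small distance means the two codes almost certainly come from the same eye; codes from different eyes disagree on roughly half their bits.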

Iris scanners are becoming more common in high-security applications because each person's irises are so distinctive (the chance of mistaking one iris code for another is 1 in 10 to the 78th power). Irises also offer more than 200 points of reference for comparison, as opposed to 60 or 70 points in fingerprints.

The iris is a visible but protected structure, and it does not usually change over time, making it ideal for biometric identification. Most of the time, people's eyes also remain unchanged after eye surgery, and blind people can use iris scanners as long as their eyes have irises. Eyeglasses and contact lenses typically do not interfere or cause inaccurate readings.

Vein Geometry

As with irises and fingerprints, a person's veins are completely unique. Twins don't have identical veins, and a person's veins differ between their left and right sides. Many veins are not visible through the skin, making them extremely difficult to counterfeit or tamper with. Their shape also changes very little as a person ages.

To use a vein recognition system, you simply place your finger, wrist, palm or the back of your hand on or near the scanner. A camera takes a digital picture using near-infrared light. The hemoglobin in your blood absorbs the light, so veins appear black in the picture. As with all the other biometric types, the software creates a reference template based on the shape and location of the vein structure.
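A toy version of the template step: dark pixels in the near-infrared image are marked as vein pixels, and two templates are compared by how much their vein pixels overlap (Jaccard similarity). The threshold of 80, the tiny 3 x 3 images and the overlap measure are assumptions for illustration. Practical systems also align the images and trace vein centerlines before matching.

```python
def vein_template(image, threshold=80):
    """Mark pixels darker than `threshold` as vein (1); veins absorb NIR light."""
    return [[1 if px < threshold else 0 for px in row] for row in image]


def template_similarity(t1, t2):
    """Jaccard overlap of vein pixels in two equally sized binary templates."""
    inter = union = 0
    for row1, row2 in zip(t1, t2):
        for a, b in zip(row1, row2):
            inter += a & b
            union += a | b
    return inter / union if union else 1.0


enrolled = vein_template([[200, 50, 200], [200, 50, 200], [200, 50, 60]])
probe = vein_template([[200, 40, 200], [200, 45, 200], [200, 55, 200]])
print(template_similarity(enrolled, probe))
```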

Scanners that analyze vein geometry are completely different from vein scanning tests that happen in hospitals. Vein scans for medical purposes usually use radioactive particles. Biometric security scans, however, just use light that is similar to the light that comes from a remote control. NASA has lots more information on taking pictures with infrared light.

In addition to the potential for invasions of privacy, critics raise several concerns about biometrics, such as:

- Overreliance: The perception that biometric systems are foolproof might lead people to neglect everyday, common-sense security practices, including protecting the system's data.

- Accessibility: Some systems can't be adapted for certain populations, like elderly people or people with disabilities.

- Interoperability: In emergency situations, agencies using different systems may need to share data, and delays can result if the systems can't communicate with each other.

Motes - Tiny and powerful

There are thousands of different ways that motes might be used, and as people get familiar with the concept they come up with even more. It is a completely new paradigm for distributed sensing and it is opening up a fascinating new way to look at computers.

The Basic Idea

The "mote" concept creates a new way of thinking about computers, but the basic idea is pretty simple:

The core of a mote is a small, low-cost, low-power computer.

The computer monitors one or more sensors. It is easy to imagine all sorts of sensors, including sensors for temperature, light, sound, position, acceleration, vibration, stress, weight, pressure, humidity, etc. Not all mote applications require sensors, but sensing applications are very common.

The computer connects to the outside world with a radio link. The most common radio links allow a mote to transmit at a distance of something like 10 to 200 feet (3 to 61 meters). Power consumption, size and cost are the barriers to longer distances. Since a fundamental concept with motes is tiny size (and associated tiny cost), small and low-power radios are normal.

Motes can either run on batteries, or they can tap into the power grid in certain applications. As motes shrink in size and power consumption, it is possible to imagine solar power or even something exotic like vibration power to keep them running.

All of these parts are packaged together in the smallest container possible. In the future, people imagine shrinking motes to fit into something just a few millimeters on a side. It is more common for motes today, including batteries and antenna, to be the size of a stack of five or six quarters, or the size of a pack of cigarettes. The battery is usually the biggest part of the package right now. Current motes, in bulk, might cost something on the order of $25, but prices are falling.
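The basic idea can be sketched as a small loop: wake up, read each sensor, hand a packet to the radio, then sleep. The `Mote` class below is a hypothetical simulation in which a list stands in for the radio. Sleeping between samples (duty cycling) is an assumption about how a battery-powered mote would stretch its power budget, not something the article specifies.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Mote:
    node_id: int
    sensors: Dict[str, Callable[[], float]]  # sensor name -> function that reads it
    outbox: List[dict] = field(default_factory=list)  # stands in for the radio link

    def sample_and_send(self) -> dict:
        readings = {name: read() for name, read in self.sensors.items()}
        packet = {"node": self.node_id, "time": time.time(), "readings": readings}
        self.outbox.append(packet)  # a real mote would transmit this over its radio
        return packet

    def run(self, cycles: int, sleep_s: float) -> None:
        for _ in range(cycles):
            self.sample_and_send()
            time.sleep(sleep_s)  # on real hardware, CPU and radio would power down here


mote = Mote(node_id=7, sensors={"temperature_c": lambda: 21.5, "vibration_g": lambda: 0.02})
mote.run(cycles=3, sleep_s=0.01)
print(len(mote.outbox), mote.outbox[0]["readings"])
```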

It is hard to imagine something as small and innocuous as a mote sparking a revolution, but that's exactly what they have done. We'll look at a number of possible applications in the next section.

How Motes Work

It is possible to think of motes as lone sensors. For example:

You could embed motes in bridges when you pour the concrete. The mote could have a sensor on it that can detect the salt concentration within the concrete. Then, once a month, you could drive a truck that generates a powerful magnetic field over the bridge. The magnetic field would allow the motes, which are buried within the concrete of the bridge, to power on and transmit the salt concentration. Salt (perhaps from deicing or ocean spray) weakens concrete and corrodes the steel rebar that strengthens the concrete. Salt sensors would let bridge maintenance personnel gauge how much damage salt is doing. Other possible sensors embedded into the concrete of a bridge might detect vibration, stress, temperature swings, cracking, etc., all of which would help maintenance personnel spot problems long before they become critical.

There are many more ideas like this that we could apply. However, much of the excitement about motes comes from the idea of using large numbers of motes that communicate with each other and form an ad hoc network.

Ad hoc Networks

The Defense Advanced Research Projects Agency (DARPA) was among the original patrons of the mote idea. One of the initial mote ideas implemented for DARPA allows motes to sense battlefield conditions.

For example, imagine that a commander wants to be able to detect truck movement in a remote area. An airplane flies over the area and scatters thousands of motes, each one equipped with a magnetometer, a vibration sensor and a GPS receiver. The battery-operated motes are dropped at a density of one every 100 feet (30 meters) or so. Each mote wakes up, senses its position and then sends out a radio signal to find its neighbors.

All of the motes in the area create a giant, amorphous network that can collect data. Data funnels through the network and arrives at a collection node, which has a powerful radio able to transmit a signal many miles. When an enemy truck drives through the area, the motes that detect it transmit their location and their sensor readings. Neighboring motes pick up the transmissions and forward them to their neighbors and so on, until the signals arrive at the collection node and are transmitted to the commander. The commander can now display the data on a screen and see, in real time, the path that the truck is following through the field of motes. Then a remotely-piloted vehicle can fly over the truck, make sure it belongs to the enemy and drop a bomb to destroy it.

This concept of ad hoc networks -- formed by hundreds or thousands of motes that communicate with each other and pass data along from one to another -- is extremely powerful.
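The forwarding described above can be sketched with a simple hop-count scheme: starting from the collection node, a breadth-first search tells every mote how many hops it is from the collector and which neighbor to hand its data to. This is an assumed, idealized routing scheme (perfect radios, a fixed range, known positions); the article describes motes forwarding to their neighbors and does not specify a protocol. Positions are in feet, using the 100-foot spacing from the example and a hypothetical 150-foot radio range.

```python
import math
from collections import deque


def build_routes(positions, sink, radio_range):
    """BFS outward from the collection node: each mote learns hop count and next hop."""
    def neighbors(node):
        x, y = positions[node]
        return [m for m, (mx, my) in positions.items()
                if m != node and math.hypot(mx - x, my - y) <= radio_range]

    parent = {sink: None}
    hops = {sink: 0}
    queue = deque([sink])
    while queue:
        node = queue.popleft()
        for nb in neighbors(node):
            if nb not in hops:
                hops[nb] = hops[node] + 1
                parent[nb] = node  # nb forwards its data toward the sink via node
                queue.append(nb)
    return parent, hops


def path_to_sink(parent, mote):
    """Follow next-hop pointers from a mote to the collection node."""
    if mote not in parent:
        raise ValueError(f"{mote} cannot reach the collection node")
    path = [mote]
    while parent[path[-1]] is not None:
        path.append(parent[path[-1]])
    return path


positions = {"sink": (0, 0), "A": (100, 0), "B": (200, 0), "C": (300, 0), "D": (200, 100)}
parent, hops = build_routes(positions, "sink", radio_range=150)
route = path_to_sink(parent, "C")
print(" -> ".join(route), f"({hops['C']} hops)")
```

Because each mote only needs to know its next hop, no single mote has to hold a map of the whole network, which is part of what makes these networks scale to thousands of nodes.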

Blink Technology - New Credit Card System

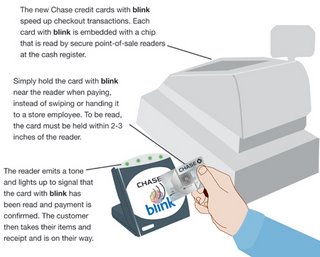

What is Blink?

The new blink credit card is just like a regular credit card in many ways. It has the account holder's name and the account number embossed on the front of the card. On the back is a magnetic strip containing the account information, so the card can be used anywhere regular credit cards can be used. The key difference is inside the card.

Embedded within the blink card is a small RFID (radio frequency identification) microchip. When the chip is close enough to the right kind of terminal, the terminal can get information from the chip -- in this case, the account number and name. So instead of swiping the magnetic strip on the card through a standard credit-card reader, card holders simply hold their card a few inches from the blink terminal. The card never leaves the card holder's hand.

As with standard credit-card transactions, the terminal then sends the information via phone line to the bank that issued the card and checks the account balance to see if there is room on the card for the purchase. If there is, the bank issues a confirmation number to the terminal, the sale is approved and the card holder is on his or her way.
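The authorization round trip can be sketched in a few lines. Everything here is hypothetical: the `Issuer` and `Account` classes, the confirmation numbering and the card data are illustrative stand-ins, and the sketch leaves out the encryption, the phone-line link and the real card-network messages.

```python
import itertools
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Account:
    credit_limit: float
    balance: float = 0.0


class Issuer:
    """Toy stand-in for the card-issuing bank that the terminal contacts."""

    def __init__(self, accounts: Dict[str, Account]):
        self.accounts = accounts
        self._confirmations = itertools.count(1000)

    def authorize(self, account_number: str, amount: float) -> Optional[int]:
        acct = self.accounts.get(account_number)
        if acct is None or acct.balance + amount > acct.credit_limit:
            return None  # declined: unknown account or no room on the card
        acct.balance += amount  # charge the purchase against the credit limit
        return next(self._confirmations)  # confirmation number for the terminal


def terminal_checkout(issuer: Issuer, card_data: dict, amount: float) -> str:
    """What the terminal does after reading the name and number from the card."""
    confirmation = issuer.authorize(card_data["account_number"], amount)
    return f"approved #{confirmation}" if confirmation is not None else "declined"


bank = Issuer({"4000123412341234": Account(credit_limit=500.0)})
card = {"name": "A. Holder", "account_number": "4000123412341234"}
print(terminal_checkout(bank, card, 120.0))  # room on the card: approved
print(terminal_checkout(bank, card, 400.0))  # would exceed the limit: declined
```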

RFID and Blink

Credit cards using blink technology employ RFID. There are many forms of RFID. For example, Wal-Mart has experimented with putting RFID chips on its merchandise so it can track inventory automatically.

Blink uses a specific kind of RFID defined by the international standard ISO/IEC 14443. ISO 14443 has certain features that make it particularly well-suited to applications involving sensitive information, such as credit-card account numbers:

* The data a blink chip transmits over its ISO 14443 link is encrypted by the card's processor.

* The transmission range is designed to be very short, about 4 inches (10 cm) or less.

As a result, ISO 14443 is used in more than 80 percent of contactless credit-card transactions worldwide. Recent additions to the standard allow ISO 14443 technology to store biometric data such as fingerprints and face photos for use in passports and other security documents.

To understand how the contactless card and terminal work together, first we have to talk about induction. In 1831, it was already known that an electric current produced a magnetic field. That year, Michael Faraday discovered that it worked the other way around as well -- a magnetic field could produce an electric current in wires that passed through the field. He called this induction, and the law that governs it is known as Faraday's Law.

In some cases, induction is something electrical engineers try to avoid. For instance, if the electric lines in your neighborhood run too close to the phone lines, the magnetic field produced by the electric lines can generate voltage in the phone lines. This voltage shows up as "noise" in the signal passing through the phone lines. Shielding and proper orientation of the lines can prevent this interference.

For RFID devices such as blink cards, engineers have harnessed induction. Each blink card contains a small microchip as well as a wire loop. The blink terminal gives off a magnetic field in the area around it. When a blink card gets close enough, the wire loop enters the terminal's field, causing induction. The voltage generated by the induction powers the microchip. Without this process, called inductive coupling, each blink card would have to carry its own power supply in the form of a battery, which would add bulk and weight and could eventually run out of power. Because the power is supplied by the terminal, the blink system is known as a passive system.
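Faraday's law gives a feel for how much voltage the terminal's field can induce in the card's loop. For a uniform field B0 sin(2πft) perpendicular to a loop of N turns and area A, the peak induced voltage is N · A · B0 · 2πf. The turn count, loop area and field strength below are assumed values chosen for illustration, not blink specifications. Real fields are far from uniform and weaken quickly with distance from the terminal.

```python
import math


def peak_induced_emf(turns, loop_area_m2, b_peak_tesla, freq_hz=13.56e6):
    """Peak EMF (volts) induced in a loop by a sinusoidal magnetic field.

    Faraday's law: emf = -N * dPhi/dt. With flux Phi = A * B0 * sin(2*pi*f*t),
    the derivative peaks at A * B0 * 2*pi*f, so |emf|max = N * A * B0 * 2*pi*f.
    Assumes a uniform field perpendicular to the loop.
    """
    return turns * loop_area_m2 * b_peak_tesla * 2 * math.pi * freq_hz


# Assumed values: 4-turn loop, 30 cm^2 (0.003 m^2), 2 microtesla peak field.
v = peak_induced_emf(turns=4, loop_area_m2=0.003, b_peak_tesla=2e-6)
print(f"peak induced voltage: {v:.2f} V")
```

Even a weak field yields volts at 13.56 MHz because the induced voltage grows with frequency, which is part of why that band suits inductive coupling.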

Once the blink card has power flowing to it from the terminal, the processor transmits information to the terminal at a frequency of 13.56 MHz. This frequency was chosen for its suitability for inductive coupling, its resistance to environmental interference and its low absorption rate by human tissue. Instruction sets built into the processor encrypt the data during transmission.

About Security

Whenever credit cards are involved, people are worried about security. Sending the credit-card data to a terminal via a radio signal might not seem very secure. But when the process operates properly, it's actually more secure than using a magnetic-strip credit card. The information on a magnetic strip can be read, altered or duplicated using a variety of devices that have been available for years. The encryption built into a blink card is designed to prevent this particular form of theft. Also, using the blink card allows the user to keep the card in his or her hand the entire time, which could prevent someone from seeing the account number and name on the card.

A signature is not required when using a blink card, which raises security concerns. Chase maintains that the encryption and other security features built into blink make the card secure without the need for a signature, which would slow down the transaction and defeat the purpose of blink altogether. The company even suggests that blink makes the transaction safer, since the clerk never sees the card or account number. The problem, of course, is that if someone gets his or her hands on your blink card, there's no need to verify anything at all in order to use it in a store. But blink users are no more liable for fraudulent charges than any other credit-card user.

There have been reports of problems in the testing of contactless RFID credit cards, however, that lead to additional security concerns. In some cases, if two or more terminals were close together, not only did both terminals read the card, but the read range of each terminal increased to as much as 30 feet (9 m). Even if the terminal is operating within the proper range of 4 inches, some people are worried that they could accidentally walk too close to a terminal and end up paying for someone else's purchase. The simplest safeguard against this is probably merchants positioning the terminals in such a way as to make this unlikely.

The worst-case scenario involves someone getting hold of a blink terminal and modifying it to increase its range. Potentially, someone could set up the terminal at a crowded location and collect the credit-card data of anyone who came within the terminal's read range. This probably won't be a concern at first, since few terminals will be available, but if the technology matures, blink terminals could fall into the hands of criminals.

There is a way to protect blink cards from giving out their information to unauthorized terminals, either accidentally or due to criminal activity. If the card is placed in a sleeve lined with metal, it will not function. If contactless credit cards become popular, expect to see "RFID blocking" wallets and purses on the market.

Sunday, August 06, 2006

Blu-ray Discs

While current optical disc technologies such as DVD, DVD±R, DVD±RW, and DVD-RAM rely on a red laser to read and write data, the new format uses a blue-violet laser instead, hence the name Blu-ray. Despite the different type of lasers used, Blu-ray products can easily be made backwards compatible with CDs and DVDs through the use of a BD/DVD/CD-compatible optical pickup unit. The benefit of using a blue-violet laser (405nm) is that it has a shorter wavelength than a red laser (650nm), which makes it possible to focus the laser spot with even greater precision. This allows data to be packed more tightly and stored in less space, so it's possible to fit more data on the disc even though it's the same size as a CD/DVD. This, together with the increase in numerical aperture to 0.85, is what enables Blu-ray Discs to hold 25GB (single layer) or 50GB (dual layer).
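The capacity gain can be estimated from the two changes the paragraph mentions. The laser spot diameter scales roughly with wavelength divided by numerical aperture, so areal density scales with (NA/λ)². The sketch starts from a single-layer DVD (4.7 GB, 650 nm, NA 0.60; the capacity and NA are not given in this article). It is a back-of-the-envelope scaling that ignores other format differences.

```python
def scaled_capacity_gb(base_gb, base_wavelength_nm, base_na, new_wavelength_nm, new_na):
    """Rough capacity scaling: spot diameter ~ wavelength / NA, so the number of
    spots per unit area (areal density) scales with (NA / wavelength) ** 2."""
    gain = ((new_na / new_wavelength_nm) / (base_na / base_wavelength_nm)) ** 2
    return base_gb * gain


# Single-layer DVD as the baseline; Blu-ray uses 405 nm and NA 0.85.
estimate = scaled_capacity_gb(4.7, 650, 0.60, 405, 0.85)
print(f"estimated single-layer Blu-ray capacity: {estimate:.1f} GB")
```

The result, about 24 GB, lands close to the 25 GB quoted for a single-layer Blu-ray Disc.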

Blu-ray is currently supported by more than 170 of the world's leading consumer electronics, personal computer, recording media, video game and music companies. The format also has broad support from the major movie studios as a successor to today's DVD format. Seven of the eight major movie studios have already announced titles for Blu-ray, including Warner, Paramount, Fox, Disney, Sony, MGM and Lionsgate. The initial line-up is expected to consist of over 100 titles and include recent hits as well as classics such as Batman Begins, Desperado, Fantastic Four, Fifth Element, Hero, Ice Age, Kill Bill, Lethal Weapon, Mission Impossible, Ocean's Twelve, Pirates of the Caribbean, Reservoir Dogs, Robocop, and The Matrix. Many studios have also announced that they will begin releasing new feature films on Blu-ray Disc day-and-date with DVD, as well as a continuous slate of catalog titles every month.

Saturday, August 05, 2006

Fingerprint Scanners: The Next Phase

A scanner that reads a fingerprint as well as the unique pattern of tissue and blood content beneath the skin could offer higher reliability for biometric security.

The paper-thin sensor, being developed by Nanoident Technologies, based in Linz, Austria, could be on the market in one to two years as a safe and secure way for accessing sensitive data on smart cards, cell phones, and other electronic devices.

According to Klaus Schroeter, CEO and founder of Nanoident, conventional finger scanners typically read just the pattern of the surface print. That has two big disadvantages: the recognition accuracy is only about 97 percent and the scanners can be fooled by fake fingers posing as the real deal.

But a scanner that captures both the surface and subsurface structures improves the recognition accuracy to 99 percent and is nearly impossible to fool.

"If you can measure the hemoglobin content, you can say this is a live finger," said Schroeter.

The flexible sensors — just two millimeters wide and 12 to 15 mm long — are produced at a cost of one to two dollars apiece using inkjet technology. A semi-conducting, ink-like liquid is printed onto a polymer or glass substrate to form light emitters, light sensors, and the circuitry necessary to process the electronic signals.

Similar components made with rigid silicon semiconductors can run between three and ten dollars apiece.

The light emitters on Nanoident's sensor illuminate the finger with different wavelengths of light: blue for the surface print and infrared to penetrate three to four mm deep into the finger's substructure.

The light sensors measure the amount of light reflected back and a proprietary software algorithm produces the patterns unique to the finger.

Once the sensor is incorporated into a smart card or an electronic device such as a personal digital assistant, the user would first record their unique print and store it in the device's memory.

Later, when wanting to access sensitive information, the user would place or swipe their finger over the sensor to confirm their identity. If the print did not match what was saved in the system, the person would not gain access.
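The enroll-then-verify flow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Nanoident's method: the "template" is a hypothetical list of numbers standing in for whatever the proprietary algorithm extracts, cosine similarity is a stand-in matcher, and the 0.95 acceptance threshold is arbitrary.

```python
import math
from typing import Dict, List

# Hypothetical template format: a plain list of numbers standing in for whatever
# the scanner's proprietary algorithm actually extracts from the finger.
Template = List[float]


def cosine_similarity(a: Template, b: Template) -> float:
    """Return a similarity in [-1, 1]; 1.0 means the two templates point the same way."""
    if len(a) != len(b):
        raise ValueError("templates must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class FingerprintStore:
    """Toy device memory: enroll one template per user, then verify later scans."""

    def __init__(self, threshold: float = 0.95) -> None:
        # Assumed acceptance threshold, chosen for illustration only.
        self.threshold = threshold
        self.templates: Dict[str, Template] = {}

    def enroll(self, user: str, template: Template) -> None:
        # Enrollment: record the user's print and keep it in memory.
        self.templates[user] = list(template)

    def verify(self, user: str, probe: Template) -> bool:
        # Verification: a fresh scan must be close enough to the stored template.
        stored = self.templates.get(user)
        if stored is None:
            return False  # nothing enrolled, so no access
        return cosine_similarity(stored, probe) >= self.threshold


store = FingerprintStore()
store.enroll("alice", [0.9, 0.1, 0.4, 0.7])
print(store.verify("alice", [0.88, 0.12, 0.41, 0.69]))  # True: close match
print(store.verify("alice", [0.1, 0.9, 0.7, 0.2]))      # False: different finger
```

Raising the threshold makes false acceptances rarer at the cost of rejecting more genuine but slightly noisy scans.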

"It's a very advantageous approach," said Arun Ross, assistant professor of computer science and electrical engineering at West Virginia University in Morgantown and a researcher at the Center for Identification Technology Research.

But, he said, gathering more information puts greater demands on the system to cancel out unwanted "noise" — such as physical flaws in the finger or environmental variables including humidity — and combine the data into a meaningful pattern.

"More information does not translate into accuracy unless there is fusion," he said.

Schroeter said the company will have its first working prototypes by the middle of next year and is currently working on a new sensor that would be incorporated into the screen of an electronic device.
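Ross's remark that more information helps only "if there is fusion" can be made concrete with score-level fusion, one common technique in multi-biometric systems (we are not claiming it is what Nanoident uses). Each matcher's raw score is first normalized to a shared 0-to-1 range, then the normalized scores are combined with weights. The score ranges and the 60/40 weighting below are hypothetical.

```python
from typing import Sequence, Tuple


def min_max_normalize(score: float, lo: float, hi: float) -> float:
    """Map a raw matcher score onto [0, 1] so scores from different matchers are comparable."""
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    return min(1.0, max(0.0, (score - lo) / (hi - lo)))


def fuse_scores(
    scores: Sequence[float],
    ranges: Sequence[Tuple[float, float]],
    weights: Sequence[float],
) -> float:
    """Weighted-sum score fusion; the weights are assumed to sum to 1."""
    if not (len(scores) == len(ranges) == len(weights)):
        raise ValueError("scores, ranges and weights must have the same length")
    normalized = [min_max_normalize(s, lo, hi) for s, (lo, hi) in zip(scores, ranges)]
    return sum(w * n for w, n in zip(weights, normalized))


# Hypothetical example: a surface-print matcher scoring 0-100 and a
# subsurface matcher scoring 0-1, weighted 60/40.
fused = fuse_scores(scores=[72, 0.9], ranges=[(0, 100), (0, 1)], weights=[0.6, 0.4])
print(round(fused, 3))  # 0.792
```

Without the normalization step, the 0-100 surface score would swamp the 0-1 subsurface score, which is one way extra information can fail to improve accuracy.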

FCC Supports Broadband over Power Lines

The Federal Communications Commission decided Aug. 3 to reaffirm its stance on the deployment of broadband-over-power-line (BPL) technology. In a Memorandum Opinion and Order adopted that day, the commissioners affirmed that BPL providers have the right to provide data access over power lines, provided they don't interfere with existing radio services.

By adopting this order, the FCC rejected requests by several groups, including the amateur radio community, the aviation industry and broadcasters, to either limit the service or to disallow it completely. However, the FCC did adopt provisions to protect some aeronautical stations and to protect radio astronomy sites from interference.

In the statements released by the commissioners, it was clear that the FCC sees BPL technology as a critical move in the effort to reduce the grip of the current broadband duopoly in the United States, and as a vital step toward serving areas of the United States that currently have no broadband access at all, including residents of rural and inner city areas.

FCC Chairman Kevin Martin said all of the commission members would like to see some non-duopoly pipes bring broadband access to hard-to-reach Americans. "This technology holds great promise as a ubiquitous broadband solution that would offer a viable alternative to cable, digital subscriber line, fiber and wireless broadband solutions," Martin said in his prepared statement.

"Moreover, BPL has unique advantages for home networking because consumers can simply plug a device into their existing electrical outlets to achieve broadband connectivity," he said.

Martin was joined in his hopeful comments by other commissioners. Commissioner Michael Copps said that the United States was already lagging in broadband adoption, and that BPL might help solve the problem.

DNA Computers - An Astonishing Future

DNA makes it possible

Even as you read this article, computer chip manufacturers are furiously racing to make the next microprocessor that will topple speed records. Sooner or later, though, this competition is bound to hit a wall. Microprocessors made of silicon will eventually reach their limits of speed and miniaturization. Chip makers need a new material to reach faster computing speeds.

You won't believe where scientists have found the new material they need to build the next generation of microprocessors. Millions of natural supercomputers exist inside living organisms, including your body. DNA (deoxyribonucleic acid) molecules, the material our genes are made of, have the potential to perform calculations many times faster than the world's most powerful human-built computers. DNA might one day be integrated into a computer chip to create a so-called biochip that will push computers even faster. DNA molecules have already been harnessed to solve complex mathematical problems.

Although DNA computers are still in their infancy, they may one day be capable of storing billions of times more data than your personal computer. In this article, you'll learn how scientists are using genetic material to create nano-computers that might take the place of silicon-based computers in the next decade.

A Fledgling Technology

DNA computers can't be found at your local electronics store yet. The technology is still in development, and the concept itself is little more than a decade old. In 1994, Leonard Adleman introduced the idea of using DNA to solve complex mathematical problems. Adleman, a computer scientist at the University of Southern California, came to the conclusion that DNA had computational potential after reading the book "Molecular Biology of the Gene," written by James Watson, who co-discovered the structure of DNA in 1953. In a sense, DNA is similar to a computer hard drive: it stores permanent information about your genes.

Adleman is often called the inventor of DNA computing. His article in a 1994 issue of the journal Science outlined how to use DNA to solve a well-known mathematical problem, the directed Hamiltonian path problem, a close relative of the "traveling salesman" problem. Given a set of cities linked by one-way flights, the goal is to find a route that starts and ends at designated cities and passes through every city exactly once. As you add more cities, the problem becomes much more difficult. Adleman's version had seven cities.
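To see why the problem gets hard, here is a brute-force Python sketch that simply tries every ordering of the cities between the fixed start and end. The four-city flight map is hypothetical and is not Adleman's actual graph.

```python
from itertools import permutations
from typing import Dict, List, Optional, Set


def hamiltonian_path(flights: Dict[str, Set[str]], start: str, end: str) -> Optional[List[str]]:
    """Return one route from start to end that visits every city exactly once, or None.

    `flights` maps each city to the set of cities it has a one-way flight to.
    """
    cities = set(flights) | {c for dests in flights.values() for c in dests}
    middle = sorted(cities - {start, end})
    # Brute force: try every ordering of the intermediate cities, (n - 2)! in total.
    for order in permutations(middle):
        route = [start, *order, end]
        if all(b in flights.get(a, set()) for a, b in zip(route, route[1:])):
            return route
    return None


# Hypothetical four-city map, not Adleman's actual graph.
flights = {"A": {"B", "C"}, "B": {"C", "D"}, "C": {"B", "D"}}
print(hamiltonian_path(flights, "A", "D"))  # ['A', 'B', 'C', 'D']
```

With the endpoints fixed, seven cities leave only 5! = 120 orderings to check, but 20 cities leave 18!, about 6.4 x 10^15, which is why the problem becomes so much harder as cities are added.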

You could probably draw this problem out on paper and come to a solution faster than Adleman did using his DNA test-tube computer. Here are the steps taken in the Adleman DNA computer experiment:

1. Strands of DNA represent the seven cities and the possible flights between them. Genetic code is written with the letters A, T, C and G, and a particular sequence of these four letters stood for each city and each possible flight path.

2. These molecules are then mixed in a test tube, with some of these DNA strands sticking together. A chain of these strands represents a possible answer.

3. Within a few seconds, all of the possible combinations of DNA strands, which represent answers, are created in the test tube.

4. Adleman eliminates the wrong molecules through chemical reactions, which leaves behind only the flight paths that connect all seven cities.
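Adleman's procedure is essentially generate-and-filter. The Python sketch below mimics it in software only: random chains of cities stand in for DNA strands sticking together, and list filters stand in for the chemical steps (in the lab these relied on techniques such as PCR and gel electrophoresis, not code). The flight map, pool size and random seed are hypothetical.

```python
import random
from typing import Dict, List, Set, Tuple


def random_chain(flights: Dict[str, Set[str]], cities: List[str], rng: random.Random) -> Tuple[str, ...]:
    """Imitate strands joining at random: start anywhere, follow random flights
    for a random number of hops, stopping early at a dead end."""
    target_len = rng.randint(1, 2 * len(cities))
    city = rng.choice(cities)
    chain = [city]
    while len(chain) < target_len and flights.get(city):
        city = rng.choice(sorted(flights[city]))
        chain.append(city)
    return tuple(chain)


def generate_and_filter(flights: Dict[str, Set[str]], start: str, end: str,
                        n_strands: int = 20000, seed: int = 0) -> List[Tuple[str, ...]]:
    rng = random.Random(seed)
    cities = sorted(set(flights) | {c for dests in flights.values() for c in dests})
    n = len(cities)
    # Steps 2-3: a large pool of random chains, each one a candidate answer.
    pool = [random_chain(flights, cities, rng) for _ in range(n_strands)]
    # Step 4, filter a: keep chains that begin and end at the required cities.
    pool = [p for p in pool if p[0] == start and p[-1] == end]
    # Step 4, filter b: keep chains that are exactly n cities long.
    pool = [p for p in pool if len(p) == n]
    # Step 4, filter c: keep chains that include every city (so each appears once).
    pool = [p for p in pool if set(p) == set(cities)]
    return sorted(set(pool))


flights = {"A": {"B", "C"}, "B": {"C", "D"}, "C": {"B", "D"}}
print(generate_and_filter(flights, "A", "D"))
```

The generation step is cheap and massively parallel in a test tube; as the text notes, the slow part in practice was the filtering.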

The success of the Adleman DNA computer proved that DNA can be used to solve complex mathematical problems. However, this early DNA computer was far from challenging silicon-based computers in terms of speed. It created a pool of possible answers very quickly, but it took days for Adleman to narrow down the possibilities. Another drawback is that it required human assistance. The goal of the DNA computing field is to create a device that can work independently of human involvement.

Three years after Adleman's experiment, researchers at the University of Rochester developed logic gates made of DNA. Logic gates are a vital part of how your computer carries out the functions you command it to do. Each gate takes one or more binary input signals and produces an output signal according to a fixed rule. In today's computers these gates are built from silicon transistors, and large numbers of them working together let the computer perform complex functions.

The Rochester team's DNA logic gates are the first step toward creating a computer that has a structure similar to that of an electronic PC. Instead of using electrical signals to perform logical operations, these DNA logic gates rely on DNA code. They detect fragments of genetic material as input, splice together these fragments and form a single output. For instance, a genetic gate called the "And gate" links two DNA inputs by chemically binding them so they're locked in an end-to-end structure, similar to the way two Legos might be fastened by a third Lego between them. The researchers believe that these logic gates might be combined with DNA microchips to create a breakthrough in DNA computing.
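To see why binding two fragments behaves like a logical AND, here is a toy Python model. It treats the test tube as a set of fragments and chemical binding as joining two strings end to end; the sequences are invented, and the model ignores all real chemistry. The loop prints the familiar AND truth table.

```python
from typing import AbstractSet, Optional


def dna_and_gate(tube: AbstractSet[str], input_a: str, input_b: str) -> Optional[str]:
    """Toy AND gate: an output strand forms only when both input fragments are present.

    Chemical binding is modeled as joining the two strings end to end.
    """
    if input_a in tube and input_b in tube:
        return input_a + input_b
    return None


# Hypothetical input sequences; a real gate's sequences would be designed in the lab.
A, B = "ATGCCG", "TTAGCA"
for has_a in (False, True):
    for has_b in (False, True):
        tube = {s for s, present in ((A, has_a), (B, has_b)) if present}
        output = dna_and_gate(tube, A, B)
        print(int(has_a), int(has_b), "->", int(output is not None))
```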

DNA computer components (logic gates and biochips) will take years to develop into a practical, workable DNA computer. If such a computer is ever built, scientists say that it will be more compact, accurate and efficient than conventional computers. In the next section, we'll look at how DNA computers could surpass their silicon-based predecessors, and what tasks these computers would perform.

A Successor to Silicon

Silicon chips have been the heart of the computing world for more than 40 years. In that time, manufacturers have crammed more and more electronic devices onto them. In line with Moore's Law, the number of transistors on a chip has doubled roughly every 18 months to two years. Moore's Law is named after Intel co-founder Gordon Moore, who predicted in 1965 that the number of components on an integrated circuit would double every year, and in 1975 revised the rate to a doubling every two years. Many have predicted that Moore's Law will soon reach its end, because of the physical speed and miniaturization limitations of silicon microprocessors.
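Steady doubling is exponential growth: if a chip holds N_0 transistors today and the count doubles every T months, after t months it holds N_0 * 2^(t/T). The short Python sketch below compares the 18-month and two-year doubling periods mentioned above, plus the one-year rate of Moore's original 1965 projection, over a ten-year span.

```python
def growth_factor(years: float, doubling_months: float) -> float:
    """Factor by which transistor counts multiply over `years` if they double every `doubling_months`."""
    return 2 ** (years * 12 / doubling_months)


for months in (12, 18, 24):
    print(f"doubling every {months} months: x{growth_factor(10, months):,.0f} over 10 years")
```

Over a decade the three rates give roughly 1,024-fold, 100-fold and 32-fold growth, so the exact doubling period matters a great deal when people forecast where silicon runs out of room.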

DNA computers have the potential to take computing to new levels, picking up where Moore's Law leaves off. There are several advantages to using DNA instead of silicon:

* As long as there are cellular organisms, there will always be a supply of DNA.

* The large supply of DNA makes it a cheap resource.

* Unlike the toxic materials used to make traditional microprocessors, DNA biochips can be made cleanly.

* DNA computers could be many times smaller than today's computers.

DNA's key advantage is that it could make computers smaller than any that have come before them, while at the same time holding more data. One pound of DNA has the capacity to store more information than all the electronic computers ever built, and a teardrop-sized DNA computer using DNA logic gates could be more powerful than the world's most powerful supercomputer. More than 10 trillion DNA molecules can fit into a space no larger than 1 cubic centimeter (0.06 cubic inches). With this small amount of DNA, a computer would be able to hold 10 terabytes of data and perform 10 trillion calculations at a time. By adding more DNA, more calculations could be performed.
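The figures in the paragraph above are consistent with simple arithmetic: 10 terabytes spread across 10 trillion molecules works out to about one byte per molecule, and 10 trillion simultaneous calculations is one per molecule. A quick Python check, assuming decimal terabytes (1 TB = 10^12 bytes):

```python
CM_PER_INCH = 2.54  # exact, by definition of the inch


def bytes_per_molecule(storage_bytes: float, molecules: float) -> float:
    """Average storage each molecule would have to carry."""
    return storage_bytes / molecules


def cubic_cm_to_cubic_inches(volume_cm3: float) -> float:
    """Convert a volume from cubic centimeters to cubic inches."""
    return volume_cm3 / CM_PER_INCH ** 3


molecules = 10e12   # "more than 10 trillion" molecules in 1 cubic centimeter
storage = 10e12     # 10 terabytes, assuming 1 TB = 10**12 bytes
print(bytes_per_molecule(storage, molecules))    # 1.0
print(round(cubic_cm_to_cubic_inches(1.0), 3))   # 0.061
```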

Unlike conventional computers, DNA computers perform many calculations in parallel. Conventional computers operate largely sequentially, taking on tasks one at a time. It is this parallelism that could allow DNA computers to solve, in hours, complex mathematical problems that might take electronic computers hundreds of years to complete.

The first DNA computers are unlikely to feature word processing, e-mailing and solitaire programs. Instead, their computing power is more likely to be used by national governments for cracking secret codes, or by airlines wanting to map more efficient routes. Studying DNA computers may also lead us to a better understanding of a more complex computer: the human brain.

Thursday, August 03, 2006

Touchscreen Monitors

Touchscreen monitors have become more and more commonplace as their price has steadily dropped over the past decade. There are three basic systems that are used to recognize a person's touch:

* Resistive

* Capacitive

* Surface acoustic wave

The resistive system consists of a normal glass panel that is covered with a conductive and a resistive metallic layer. These two layers are held apart by spacers, and a scratch-resistant layer is placed on top of the whole setup. An electrical current runs through the two layers while the monitor is operational. When a user touches the screen, the two layers make contact in that exact spot. The change in the electrical field is noted and the coordinates of the point of contact are calculated by the computer. Once the coordinates are known, a special driver translates the touch into something that the operating system can understand, much as a computer mouse driver translates a mouse's movements into a click or a drag.
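In a common four-wire resistive design, the controller applies a voltage across one layer and reads, through an analog-to-digital converter (ADC), the voltage picked up by the other layer at the touch point; that voltage rises roughly in proportion to the distance along the driven layer. The Python sketch below turns such readings into screen coordinates. The 12-bit ADC, the calibration values and the 1024 x 768 screen are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Calibration:
    """Raw ADC readings seen at the screen edges (hypothetical values for a 12-bit ADC)."""
    x_min: int = 200
    x_max: int = 3900
    y_min: int = 250
    y_max: int = 3850


def _fraction(raw: int, lo: int, hi: int) -> float:
    # Voltage-divider model: the reading rises linearly with distance along the driven layer.
    return min(1.0, max(0.0, (raw - lo) / (hi - lo)))


def raw_to_screen(raw_x: int, raw_y: int, cal: Calibration, width: int, height: int) -> Tuple[int, int]:
    """Convert a pair of ADC readings to pixel coordinates, clamped to the screen."""
    fx = _fraction(raw_x, cal.x_min, cal.x_max)
    fy = _fraction(raw_y, cal.y_min, cal.y_max)
    return round(fx * (width - 1)), round(fy * (height - 1))


cal = Calibration()
print(raw_to_screen(200, 250, cal, 1024, 768))     # (0, 0)
print(raw_to_screen(3900, 3850, cal, 1024, 768))   # (1023, 767)
```

The calibration step exists because the layers never produce exactly 0 or full-scale readings at the edges; a driver maps the observed edge readings onto the visible pixel range.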

In the capacitive system, a layer that stores electrical charge is placed on the glass panel of the monitor. When a user touches the monitor with his or her finger, some of the charge is transferred to the user, so the charge on the capacitive layer decreases. This decrease is measured in circuits located at each corner of the monitor. The computer calculates, from the relative differences in charge at each corner, exactly where the touch event took place and then relays that information to the touchscreen driver software. One advantage that the capacitive system has over the resistive system is that it transmits almost 90 percent of the light from the monitor, whereas the resistive system only transmits about 75 percent. This gives the capacitive system a much clearer picture than the resistive system.
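The corner-based calculation can be illustrated with an idealized linear model: corners closer to the finger supply a larger share of the charge, so the share drawn through the right-hand corners gives the horizontal position and the share drawn through the lower corners gives the vertical one. This Python sketch is an assumption-laden simplification; real controllers add calibration and linearization on top of it, and the corner readings below are hypothetical.

```python
from typing import Tuple


def locate_touch(ul: float, ur: float, ll: float, lr: float,
                 width: float, height: float) -> Tuple[float, float]:
    """Estimate the touch point from the charge drawn through each corner.

    ul/ur/ll/lr are the amounts measured at the upper-left, upper-right,
    lower-left and lower-right corners. The right-hand share gives x and the
    lower share gives y, with y growing downward.
    """
    total = ul + ur + ll + lr
    if total <= 0:
        raise ValueError("no touch detected")
    x = (ur + lr) / total * width
    y = (ll + lr) / total * height
    return x, y


print(locate_touch(1, 1, 1, 1, 1024, 768))  # (512.0, 384.0): center of the screen
print(locate_touch(1, 3, 1, 3, 1024, 768))  # (768.0, 384.0): toward the right edge
```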